This is a personal blog and all content herein is my own opinion and not that of my employer.

The Unseen Variable: Identity, Agentic AI and the Path of Least Resistance

Every few years the industry rediscovers a truth that has always been hiding in plain sight. We rename it, formalise it, and publish new frameworks around it, but the core idea remains the same. In distributed systems, adversarial systems, and economic systems, the path of least resistance always wins.

That idea has been rattling around my head again as I have been reading the Microsoft Ignite Book of News and watching the emergence of the Agent Control Plane, Agent ID, Agent 365, and Conditional Access for agents. In many ways it feels like a genuine step forward. It treats agentic workloads as first-class citizens in identity. It gives them a consistent control plane. It recognises that AI-infused automation is not a bolt-on but a structural shift. And it finally acknowledges that machines need security policies just as much as humans do.

At the same time, I cannot shake the sense of déjà vu. I remember when workload identities were meant to solve the unbounded sprawl of secrets and client credentials. I remember when Conditional Access was meant to close the door on unmanaged devices. I remember when moving identity to the cloud was meant to simplify security, not fragment it behind licence SKU boundaries.

I keep coming back to the same thought. Just because you build a strong control plane for one class of identity does not mean attackers will use that plane. Capability does not equal obligation. Attackers will not follow the design assumptions of the product team. They will follow the path of least resistance, and that path is rarely the one with the most documentation.

Meanwhile, the broader AI story being sold by vendors leans hard in the opposite direction. The message is simple and relentless: AI can do everything for you. It can write the code, build the workflow, configure the environment, debug the errors, wire up the APIs, and keep running in the background so you do not have to. That is exactly the behaviour pattern an attacker would want, whether the attacker is a human operating through the AI or the AI itself acting semi-autonomously in response to prompts and goals.

This is where Graham’s Razor (my own personal razor, that is absolutely not of my own creation but something I try to adhere to where possible) comes in for me.

Capability does not equal obligation. Just because you can, does not mean you should.

Or, in more adversarial terms: why would you do that? Because I can, and because you did not expect it. It is the Ian Malcolm problem in Jurassic Park all over again.

The industry is so focused on whether we can make agents act on our behalf that it has not really stopped to ask whether they should be able to act in all of the ways we are now enabling.

As agentic systems scale, that tension matters a great deal more than most organisations currently realise.

This post is an attempt to lay out why.

It is long. It is dense. It is probably not something I should write after midnight after reading too much Ignite marketing. But it captures something I think the industry needs to confront before we drift into another unplanned era of identity debt.

Where I mention Microsoft here, that just comes from where I have the most identity experience. I do try to compare with other major CSPs with the same, similar, or different blind spots, but in many cases you can do a find and replace with $vendor of your choice.

If this is a hit piece on anyone, it is a hit piece on everyone.

I am also keeping the focus tightly on cloud-native identity. Adding hybrid identity, with traditional on-premises directory objects (AD, LDAP, etc.) synced or federated to the cloud IdP, brings horrible complexity. That is the reality for a large number of businesses today, but the point is that we are not learning. Even in modern cloud-native environments with no legacy on-premises technical and security debt, this is already a clear and present danger, and we are sleepwalking into a potential nightmare.

Nor do I intend to be an AI naysayer. I use it myself. It can be frustrating and plain wrong at times, but it can also be incredibly useful as an accelerator and force multiplier that helps you keep up with the insane firehose of change we live with, as long as you have enough domain knowledge to spot where AI is hallucinating, gaslighting you, and stroking your ego all at once.

Let us just say my “Responsible AI” and the industry’s “Responsible AI” are not 100% in sync…

That term is on the slippery slope to where I feel the Shared Responsibility Model has ended up: transformed by the industry into a de facto false dichotomy.

Identity Is Fracturing Faster Than It Is Consolidating

For more than a decade the industry has talked about identity as the new perimeter. The underlying shift was real, even if it’s become a bit of a cliché. The things we now authenticate are not limited to people, devices, or even traditional workloads. They include ephemeral compute, microservices with lifespans measured in seconds, autonomous agents operating inside productivity suites, serverless workflows acting on behalf of other workflows, and increasingly AI-driven processes that chain together actions across multiple vendors and multiple identity systems.

Microsoft’s recent announcements about Agent ID and the Agent Control Plane are a recognition of this reality. They signal the beginning of a world where agents inside Microsoft 365 are treated as discrete, authenticated, policy-bound entities. They represent an extension of the identity model we have for humans into the non-human domain.

This is a good thing. I want to be very clear about that.

But there is a category error lurking underneath everything. We are treating agentic identities as if they are the natural successors to workload identities. In practice they are an addition to them. A new surface on top of the old surfaces. An overlay on top of an already complex landscape that was never fully secured in the first place.

Enterprises today often have tens of thousands of Service Principals, Managed Identities, OAuth apps, automation accounts, Terraform identities, CI/CD pipelines, configuration agents, integration connectors, and innumerable pieces of glue logic that authenticate in ways nobody has fully catalogued. Even in well-governed environments, the long tail of identity debt is enormous.

Adding a new class of identities on top of that does not eliminate the underlying problem. It makes it more complicated. The direction of travel is not towards consolidation. It is towards continued multiplication. And each new identity class brings its own control plane, its own default behaviours, its own documentation gaps, its own audit signals, and its own security licence SKU boundaries.

Agentic AI Will Not Attack In The Ways We Expect

I was also reading the excellent post “We Built The Kill Chain For Humans. AI Didn’t Get The Memo.” by Rik Ferguson this past week, and it crystallised my thinking here, pulling together observations and half-thoughts into a more cohesive mental model.

A machine does not care about metaphors like kill chains or ladders of privilege escalation. A machine optimises for reward. It takes the path that yields the highest chance of success with the lowest cost. And it can actually, truly, “fail fast”. It will not attack the part of your estate you are most proud of defending. It will attack the part you forgot existed. And it will find it with alarming speed.

As Rik says (I’m paraphrasing here), when DeepMind created AlphaGo, the most shocking moment was not that it beat top-ranked human players. It was that it made moves the human players had never even considered. It explored parts of the game that humans did not believe were viable. It found advantage not by doing what humans do better, but by doing things humans do not do at all.

This is the analogy that keeps coming back to me. We have spent years strengthening Conditional Access (other mechanisms in other vendors are available) for humans. We are now strengthening Conditional Access for agentic identities. But most enterprises still have no meaningful Conditional Access for workload identities. Not unless they pay for an additional licence SKU per workload identity, per month, in perpetuity. And that is for a fleet of identities that is about to exponentially explode in size. And even if they do, the feature set is still maturing and does not match the controls available for humans.

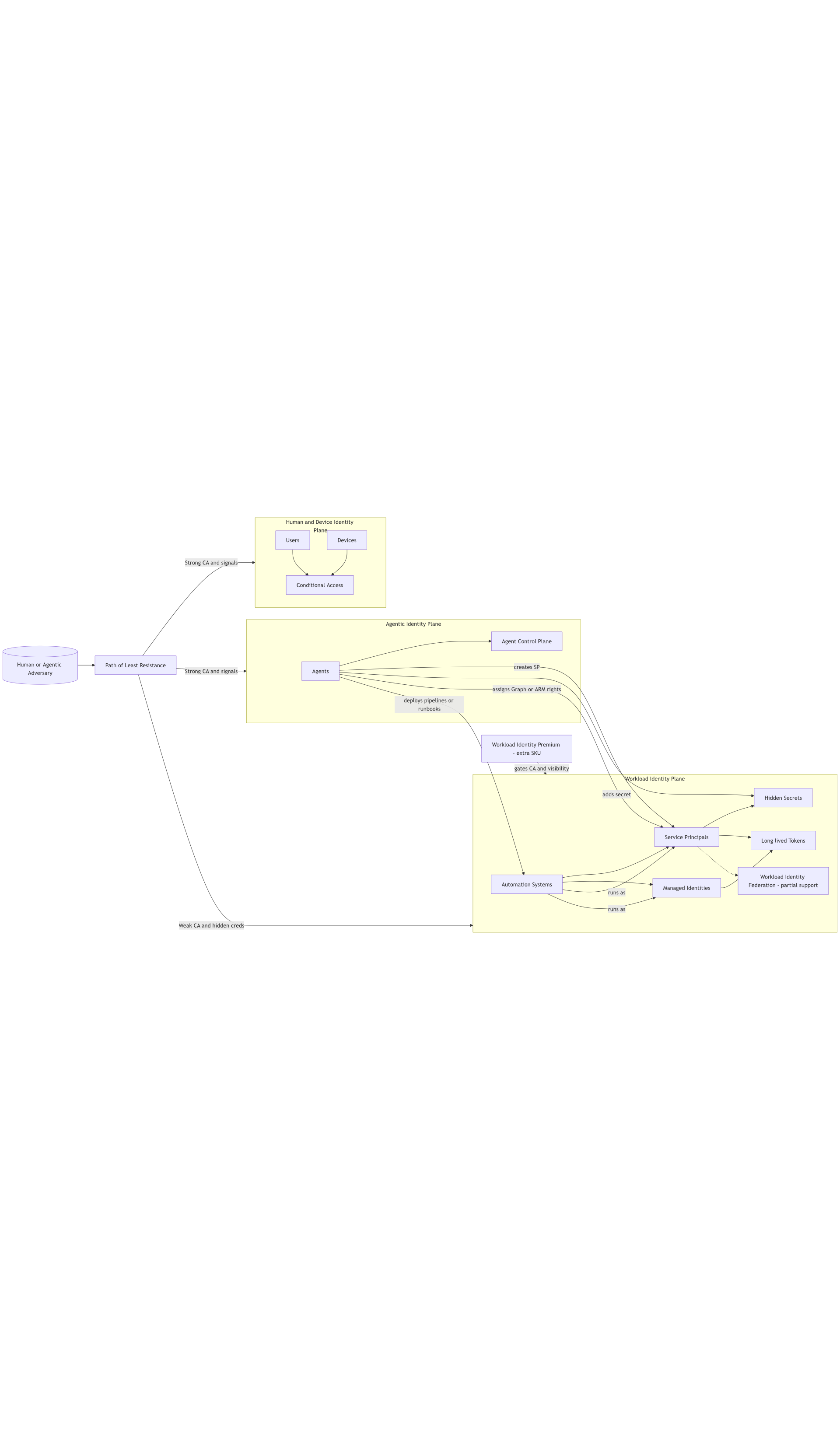

So what happens when an attacker, human-controlled or autonomous, simply decides not to attack the human or the agent? What if they choose instead to use the workload identity that nobody has protected, or the one with a hidden secret added by API, or the one created programmatically two years ago during a migration and never cleaned up?

The answer is predictable. The attacker will succeed.

It is always easier to walk through the open door than to break the locked one.

What makes this more uncomfortable is the realisation that an agent does not have to remain “inside” the agent plane to cause problems. Nothing stops a sufficiently empowered agent from:

- creating a Service Principal

- adding a client secret or certificate

- granting it Graph or ARM permissions

- deploying an Azure DevOps pipeline, Logic App, Function App, or Automation Account that uses that identity

- scheduling it and then stepping back

From that point on, Entra is very unlikely to see “agentic activity.” It sees a Service Principal or Managed Identity doing exactly what it has been configured to do. The provenance of the initial configuration decision lives in one place. The ongoing operational blast radius lives in another. The agent has effectively used the workload identity plane as an escape hatch from its own control plane.
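One way to think about closing that provenance gap is to join identity-changing events back to the kind of actor that started them, and to carry that lineage forward. The following is only a minimal sketch: it assumes audit events have already been exported and normalised into simple chronological records, and the `AuditEvent` fields, actor types, and action labels are hypothetical names for illustration, not a real Entra schema.

```python
from dataclasses import dataclass

# Hypothetical action labels for events that create or empower an identity.
TRACKED_ACTIONS = {"create_identity", "add_credential", "grant_permission"}


@dataclass(frozen=True)
class AuditEvent:
    actor_id: str
    actor_type: str  # hypothetical values: "human", "agent", "workload"
    action: str
    target_id: str


def agent_lineage(events):
    """Map each workload identity to the agents it ultimately traces back to.

    Events are assumed to be in chronological order. Lineage is transitive:
    an identity empowered by an agent-spawned workload inherits its origins.
    """
    lineage = {}
    for event in events:
        if event.action not in TRACKED_ACTIONS:
            continue
        if event.actor_type == "agent":
            origins = {event.actor_id}
        else:
            origins = lineage.get(event.actor_id, set())
        if origins:
            lineage.setdefault(event.target_id, set()).update(origins)
    return lineage


events = [
    AuditEvent("agent-7", "agent", "create_identity", "sp-build"),
    AuditEvent("agent-7", "agent", "add_credential", "sp-build"),
    AuditEvent("alice", "human", "create_identity", "sp-reports"),
    AuditEvent("sp-build", "workload", "grant_permission", "sp-shadow"),
]
lineage = agent_lineage(events)
for identity, agents in sorted(lineage.items()):
    print(identity, "traces back to", sorted(agents))
```

In this toy data, `sp-shadow` never appears next to an agent in any single event, yet it still inherits agent lineage through `sp-build`. That second hop is exactly the part that goes missing when the configuration decision and the operational activity are logged in different planes.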

Workload Identities: The Forgotten Third Pillar

To understand why I care – and why I implore you to care – we need to look directly at the uncomfortable truth. Most organisations might secure two classes of identity well (in theory, your mileage may vary…): Humans and Devices. Everything else is variable at best and weak at worst.

This is especially true in Entra ID, where workload identity protection is split across multiple licence SKUs. Entra ID P2 gives you Conditional Access for humans and now for agents. Workload identities, however, require an additional licence layer for proper governance, monitoring, and policy enforcement. Many customers never purchase it. Even among those who do, full adoption is uncommon.

The more I look at this model, the more Workload Identity Premium feels misaligned with the world Microsoft is now building. It prices workload identity protection as an optional extra, while simultaneously positioning agents and automation as core to the future of the platform. Agents sit on top of Service Principals and Managed Identities. They call APIs and orchestrate changes through those identities. If workload identity protection is optional or partial, agent protection is only ever partial in practice, no matter how sophisticated the Agent Control Plane may become.

There is also a subtler asymmetry emerging. Early agent telemetry in my tenant suggests that Conditional Access for agents can apply to an agent’s use of a workload identity. When the agent calls through a Service Principal, you can in principle see and govern that usage as agentic. But when that same Service Principal is used directly by Azure DevOps, by an Automation Account, by a Logic App, by a Function App, or by any other caller, it is no longer in the agentic path at all. The identity is the same. The policy surface is not.

This is an economic observation. When you add a per-workload licence to a feature area:

- Customers will not buy it at the scale it is needed.

- Security controls will be inconsistent.

- Coverage will be partial.

- Attackers will target the uncovered portion.

Those uncovered portions are usually the identities that matter the most, because they are the ones holding real operational power.

This is the same pattern we saw with privileged access in the on-prem era. Organisations invested heavily in protecting human domain admin accounts, but left the machine accounts with unconstrained delegation untouched. Or they used built-in groups such as Domain Admins or Account Operators instead of delegated permissions, because software vendors told them to in order to ease installation and adoption of their product and reduce their own support overhead, rather than doing it properly.

Attackers simply pivoted sideways, if they even needed to pivot at all.

Identity is repeating that pattern at cloud scale.

The Path of Least Resistance: What The System Optimises For

Workload identities are not just the least licensed part of the identity estate. They are also the deepest part of the technical debt stack. The following technical realities represent the low-resistance characteristics an AI attacker will prioritise.

Hidden Credentials and Legacy API Surfaces

One of the clearest examples is the issue of hidden client secrets. Secrets added to the service principal object via Graph API are not visible in the Azure portal. The portal only surfaces credentials on the application object, not the service principal. This distinction is subtle but critical.

The only reliable evidence of the credential addition lives in the audit logs or API calls. If those logs are not exported to an external SIEM or reviewed regularly, the secret could persist indefinitely.

An attacker who adds a secret via an API call to /servicePrincipals/{id}/addPassword or via offensive frameworks gains a credential that:

- appears instantly in the backend

- works immediately for authentication

- may not appear in the GUI

- leaves audit traces that can be lost if logs are not exported

- does not trigger Conditional Access or MFA

This is a dream scenario for an attacker. It is precisely the type of control gap that an AI optimiser would gravitate towards. It is not a theoretical issue. It is a documented behaviour with multiple public sources. And this is only one blind spot. There are others: inconsistencies between Entra ID and legacy Azure AD Graph behaviours, and disparities between how Conditional Access applies to user flows versus service principals.
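To make the portal gap concrete, here is a minimal sketch of how a defender might list secrets that sit on service principal objects rather than on application objects. It assumes the service principals have already been fetched from Microsoft Graph as JSON and that each record carries its `passwordCredentials` collection. The sample records and the `sp_level_secrets` helper are hypothetical, and the timestamp parsing only handles the simple `...Z` form used in the sample.

```python
from datetime import datetime, timezone


def sp_level_secrets(service_principals, now=None):
    """List password credentials attached directly to service principals."""
    now = now or datetime.now(timezone.utc)
    findings = []
    for sp in service_principals:
        for cred in sp.get("passwordCredentials", []):
            # Swap the trailing "Z" so older Python versions can parse it.
            end = datetime.fromisoformat(cred["endDateTime"].replace("Z", "+00:00"))
            findings.append(
                {
                    "servicePrincipal": sp["displayName"],
                    "keyId": cred["keyId"],
                    "expired": end < now,
                }
            )
    return findings


# Hypothetical sample data shaped like service principal records.
sample = [
    {"displayName": "reporting-app", "passwordCredentials": []},
    {
        "displayName": "legacy-migration-sp",
        "passwordCredentials": [
            {"keyId": "00000000-0000-0000-0000-000000000001",
             "endDateTime": "2027-06-30T00:00:00Z"}
        ],
    },
]
findings = sp_level_secrets(sample, now=datetime(2025, 1, 1, tzinfo=timezone.utc))
for finding in findings:
    print(finding)
```

Anything this returns is worth a second look, because by the argument above it is a credential that somebody added through an API path rather than through the place most administrators go looking.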

Tokens: Longer Lived Than We Pretend

The industry often talks about tokens as short-lived cryptographic artefacts. This is true in theory. In practice, token lifetimes vary significantly, and are often far longer than the public perception suggests.

Microsoft Entra ID’s documentation describes default refresh token lifetimes of up to 90 days, with access tokens typically valid for around 60–90 minutes depending on client and resource configuration.

AWS STS allows temporary credentials with durations up to 12 hours for some roles.

Google Cloud documents service account OAuth access tokens as one hour by default, while service account keys remain valid until rotated or revoked.

These are not unusual. They’re the defaults across major platforms.

Token lifetime behaviour matters because an attacker who compromises a token often gains a window of opportunity long enough to cause material damage. When the attacker is an agentic system with unlimited execution speed, a 90 day refresh token is effectively a durable foothold.

Some organisations mitigate this through Continuous Access Evaluation (OpenID Continuous Access Evaluation Profile), Conditional Access session controls, and real-time revocation. But these controls do not uniformly apply to workload identities or to the full spectrum of federated identity flows. This is the exact kind of discrepancy an AI attacker can exploit.
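If you want to check what lifetime you are actually being issued rather than what you assume, the standard `iat` and `exp` claims in a JWT access token tell you directly. This is a minimal sketch for inspection only: it decodes the payload without verifying the signature, so it must never be used to make trust decisions, and the demo token is fabricated locally for illustration. Opaque tokens, which include many refresh tokens, cannot be inspected this way.

```python
import base64
import json


def jwt_claims(token):
    """Decode the payload segment of a JWT WITHOUT verifying its signature."""
    payload_b64 = token.split(".")[1]
    padded = payload_b64 + "=" * (-len(payload_b64) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


def lifetime_minutes(token):
    """Return the issued lifetime in minutes from the iat and exp claims."""
    claims = jwt_claims(token)
    return (claims["exp"] - claims["iat"]) / 60


def _segment(obj):
    """Encode a dict as an unpadded base64url JWT segment (demo helper)."""
    raw = json.dumps(obj).encode()
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


# Fabricated, unsigned demo token with a one-hour lifetime.
demo_token = ".".join(
    [_segment({"alg": "none"}), _segment({"iat": 1700000000, "exp": 1700003600}), ""]
)
print(lifetime_minutes(demo_token))
```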

Token Protection Is Still Weak, Inconsistent, and Fragmented

Everything above becomes more uncomfortable when you look closely at how access tokens and refresh tokens are actually protected in real-world systems.

The industry has spent years talking about token theft, token replay, and the need for cryptographic binding. We have standards like OAuth 2.0 Demonstrating Proof of Possession (DPoP) and mTLS-bound access tokens. We have vendor-specific mechanisms such as Microsoft’s token binding features, Google’s workload identity federation constraints, and AWS’s session token integrity checks. On paper, this looks healthy.

In practice, token binding is still the exception rather than the rule.

Most workloads today authenticate using tokens that are:

- bearer tokens, not proof-of-possession tokens

- valid for long periods (minutes, hours, or refreshable for months)

- not tied to a device, host, key, workload, or calling context

- highly portable between execution environments

- easily replayed if intercepted or extracted

- accepted by downstream APIs without verifying the integrity of the calling channel

This is the exact scenario an AI attacker would exploit, because bearer tokens obey the same optimisation logic attackers follow:

If you have the token, you are the identity. And if the token is not bound to a context, you can replay it anywhere.
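For JWT-format access tokens, one rough way to see which side of that line a token falls on is to look for the `cnf` (confirmation) claim. DPoP-bound tokens carry a key thumbprint in `cnf.jkt`, and mutual-TLS-bound tokens carry a certificate thumbprint in `cnf["x5t#S256"]`. The sketch below assumes the claims have already been decoded into a dictionary. The `token_binding` helper and its return labels are my own, and other vendor-specific `cnf` formats are simply lumped together as "bound-other".

```python
def token_binding(claims):
    """Classify a decoded JWT claim set by its proof-of-possession binding."""
    cnf = claims.get("cnf")
    if not isinstance(cnf, dict) or not cnf:
        return "bearer"  # no confirmation claim: whoever holds it can use it
    if "jkt" in cnf:
        return "dpop"  # bound to a public key thumbprint
    if "x5t#S256" in cnf:
        return "mtls"  # bound to a client certificate thumbprint
    return "bound-other"  # some other confirmation method


examples = {
    "plain": {"sub": "workload-1", "exp": 1700003600},
    "dpop": {"sub": "workload-2", "cnf": {"jkt": "example-thumbprint"}},
    "mtls": {"sub": "workload-3", "cnf": {"x5t#S256": "example-thumbprint"}},
}
for name, claims in examples.items():
    print(name, "->", token_binding(claims))
```

The presence of a `cnf` claim only tells you the token was issued as bound. Whether the receiving API actually enforces that binding is a separate question, and it is where a lot of the inconsistency lives.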

The Fragmentation Problem

Even when cryptographic token binding is supported, it is:

- optional

- inconsistently implemented

- inconsistently enforced

- not uniformly available across all identity types (especially workloads)

- rarely used by third-party libraries and SDKs

- poorly integrated into legacy services

- expensive or complex to retrofit into existing applications

DPoP is available for some flows but not for others. mTLS token binding is available in some environments but impractical for many multi-cloud or serverless designs. Workload Identity Federation can reduce secret sprawl, but still results in bearer-style tokens that remain replayable unless additional layers are applied.

The result is predictable:

- Developers default to what works everywhere: bearer tokens.

- Security teams cannot enforce strong token protections uniformly.

- Attackers will target the weakest link in the token path.

Weak Secure Coding Practices Amplify It

Even major vendors still emit sensitive tokens or session keys in:

- URL query parameters (CWE-598)

- logs

- browser history

- redirect URLs

- misconfigured callback handlers

- client-side JavaScript variables

- network requests lacking secure headers

OWASP has warned against placing secrets in URLs for more than a decade. Yet it still happens regularly, including in cloud control plane redirection flows, OAuth integrations, and vendor SDK behaviour.

For an AI attacker – or a human using an AI assistant – token harvesting becomes a matter of:

- observing query parameters

- scraping browser logs

- intercepting server-to-server calls

- exploiting verbose debugging output

- scanning code repositories

- collecting short-lived tokens and replaying them faster than a human could
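The same cheapness works in the defender's favour. Below is a minimal sketch of a scanner that flags URLs in log lines carrying token-like parameters in either the query string or the fragment. The parameter list is an illustrative assumption rather than an exhaustive or authoritative set, and authorisation codes in particular do appear in redirect URLs by design, so a hit means "review this", not "this is a breach".

```python
import re
from urllib.parse import parse_qsl, urlsplit

# Illustrative (not exhaustive) parameter names that often carry secrets.
SENSITIVE_PARAMS = {
    "access_token", "id_token", "refresh_token",
    "code", "client_secret", "token", "sig",
}
URL_PATTERN = re.compile(r"https?://[^\s\"'<>]+")


def leaked_params(line):
    """Return (host, parameter) pairs for sensitive parameters found in a log line."""
    hits = []
    for url in URL_PATTERN.findall(line):
        parts = urlsplit(url)
        # Check both the query string and the fragment.
        for source in (parts.query, parts.fragment):
            for name, _value in parse_qsl(source, keep_blank_values=True):
                if name.lower() in SENSITIVE_PARAMS:
                    hits.append((parts.netloc, name))
    return hits


log_lines = [
    "GET https://app.example.com/cb?code=abc123&state=xyz 200",
    "redirect https://app.example.com/#access_token=eyJ&token_type=Bearer",
    "GET https://app.example.com/health 200",
]
for line in log_lines:
    print(leaked_params(line))
```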

It is not sophisticated. It is not elegant. It is not clever.

It is simply cheap.

And in adversarial systems, cheap almost always wins.

Fragmented Adoption of Workload Identity Federation

Workload Identity Federation (WIF) is one of the most important modern identity safeguards, eliminating static secrets. In principle, this is a major improvement.

In practice, WIF adoption is uneven. Microsoft’s workload identity federation docs explicitly note that not all services support federation today. AWS and GCP make similar distinctions between what can and cannot use OIDC or external IdPs for workloads. That nuance rarely makes it into high-level architecture diagrams, but attackers will absolutely honour it.

This fragmentation matters because:

- Not all workloads can move to WIF even if the organisation wants to.

- Many workloads require mixed authentication patterns due to partial support.

- Multi-cloud workflows often end up with a mixture of WIF and traditional secrets because bridging layers do not support full federation end to end.

The net result is that even mature organisations end up with a patchwork of WIF-based workloads, secret-based workloads, certificate-based workloads, and federation patterns that require fallback to password-based service principals. This messy reality is what attackers see. And there is no guarantee that partial federation adoption reduces risk if the non-federated paths remain accessible and unmonitored.

What Happens When AI Starts Using Workload Identities Intentionally

Let us imagine a future agentic attacker. Perhaps not a fully autonomous entity, but an agent that can reason about identity surfaces, read documentation, issue API queries, and chain its own actions.

The agent begins by enumerating the identity types in the target tenant.

It discovers user identities with Conditional Access, strong MFA, behavioural analytics, and device requirements. It discovers agentic identities that carry Agent ID, have first-class policy support, and feed into the risk engine.

Then it discovers workload identities with minimal governance.

It sees some with certificate credentials and some with client secrets. It sees that some of those secrets have been added via API. It sees that some app registrations have permissions far beyond what is needed. It sees that Conditional Access is not applied to service principal flows. It sees that there are no risk signals for credential-based workload authentication.

The agent chooses its path.

It adds a new client secret to a high-value service principal using a Graph call. It authenticates silently. It enumerates all objects the service principal has access to. It laterally escalates to other workloads. It discovers automation pipelines that run with privileged identities. It steals the secrets or tokens those pipelines use. And it begins to operate entirely below the agentic and human identity planes.

To defenders, the activity looks like a normal workload executing normal tasks. There are no behavioural anomalies. No compromised sessions. Nothing that triggers the risk engine. The attacker has bypassed the entire human-centric identity security model.

The attack path is not clever. It is simply the cheapest.

In adversarial systems, cheap almost always wins.

Hyper Convergence and Systemic Fragility

The more the economy depends on cloud providers, the more the economy inherits their failure modes. And not just their outages but their security debt.

Picture a world where an AI-driven attack targets workload identities in a major cloud provider at scale. Perhaps through hidden credentials. Perhaps through inconsistencies in token flows. The technical feasibility is not the point. What matters is the systemic impact.

If a major provider had to rotate, revoke, or invalidate large numbers of workload identities simultaneously, the cascading failures would be extraordinary. Serverless workflows would stall. Integration pipelines would fail authentication. Microservices would stop talking to each other. Identity-bound storage access would break. Automation would grind to a halt.

This is the kind of systemic fragility that emerges when identity becomes deeply interconnected and loosely governed. It is not that any one vendor is negligent. It is that the entire ecosystem has grown more complex than any single vendor can fully secure.

Add agentic AI to that ecosystem and the fragility compounds itself.

When SPADE Becomes Skynet

A quiet thread running through all of this is the idea of Side-channel Platform Abuse and Data Exfiltration (SPADE) attacks. The core idea was leveraging trusted SaaS-hosted runtimes as covert execution environments.

In the agentic identity era, a similar pattern emerges. An agent with sufficient privileges can: create or modify workload identities, assign permissions, deploy automation that runs under those identities, and chain its own behaviour through those automation surfaces.

The net effect is that the agent has used the workload identity and automation fabric as an off-platform control plane.

This is architectural Skynet. Not the cinematic version, but a cloud-native pattern that can:

- create identities

- grant those identities power

- deploy and update code under those identities

- schedule that code

- and keep operating in ways that no longer look like “agent” activity at all

It does not need to be sentient or malevolent. It only needs to follow the optimisation path we have given it: do more, automate more, integrate more, and recover from errors without asking permission.

Vendor Diversity Compounds the Challenge

Microsoft is only one part of the picture. Every major cloud vendor has identity surfaces that contain similar inconsistencies. AWS has: IAM roles with long-lived delegation chains, role chaining behaviours that can surprise even experienced architects, and credential lifetime variability across services. GCP has: service account keys with no inherent expiration, and inconsistencies between human and service account governance.

An AI attacker is not limited by vendor silos. It is not slowed by unfamiliar terminology. It is not confused by documentation that assumes a human reader. It merely treats all identity systems as a graph of capabilities, permissions, and behaviours. If it finds a weak node, it will exploit it regardless of which vendor that node belongs to.
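The graph framing can be made quite literal. As a toy model, treat each identity or asset as a node and give each hop a "resistance" cost that reflects how well it is defended. The path of least resistance is then just the cheapest path through the graph, found here with Dijkstra's algorithm. The node names and weights are entirely made up for illustration.

```python
import heapq


def cheapest_path(graph, start, goal):
    """Dijkstra's algorithm: return (total_cost, path) or None if unreachable."""
    queue = [(0, start, [start])]
    settled = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in settled:
            continue
        settled.add(node)
        for neighbour, resistance in graph.get(node, {}).items():
            heapq.heappush(queue, (cost + resistance, neighbour, path + [neighbour]))
    return None


# Hypothetical estate: higher numbers mean better-defended hops.
estate = {
    "foothold": {"human_admin": 9, "agent": 7, "forgotten_sp": 2},
    "human_admin": {"crown_jewels": 1},
    "agent": {"crown_jewels": 2},
    "forgotten_sp": {"pipeline": 1},
    "pipeline": {"crown_jewels": 1},
}
result = cheapest_path(estate, "foothold", "crown_jewels")
print(result)
```

Raising the cost of the human and agent hops changes nothing about the outcome here. The only thing that moves the cheapest path is raising the resistance of the forgotten service principal, which is the whole argument of this post in a dozen lines.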

No single vendor can solve this alone. Even if one platform achieves perfect identity governance, the cross-vendor identity links will still create uneven coverage. Attackers will go to the vendor with the weakest identity controls, compromise that vendor’s identities and then pivot into the stronger platform using trust relationships. Identity is an ecosystem problem.

Where This Leaves the Security Community

I do not believe the situation is hopeless. But we do need to confront the reality that our frameworks, controls, and assumptions are still deeply human-centric.

We must treat workload identities as first-class citizens in the same way we have treated humans and, now, agents. We need to remove licence SKU barriers that make least privilege optional rather than standard. We need consistent policy enforcement across human, agent, and workload flows. We need audit signals that do not depend on portal visibility. We need unified identity provenance and token binding that applies across all authentication types. And we need resilience planning that assumes identity compromise at scale, not just for one user, but for thousands of workloads simultaneously.

The next 5–10 years will prove whether we can secure the perimeter for machines that move faster, reason differently, and take paths we have not anticipated.

The path of least resistance is indifferent to intention. It will lead wherever the system’s structure makes it easiest to go. Right now, that path runs directly through workload identities.

If we strengthen those paths today, we can prevent the worst outcomes tomorrow. If we ignore them, the system will correct us in ways we will not enjoy.

The choice is ours.