Image by Guille Álvarez on Unsplash


In my last post, Grav in Azure part 7 - Backup Solutions, I discussed backup options for a Grav blog running on a D1 Web App instance, along with some other automation opportunities.

My last post ended with me considering Git-Sync as a way to get not only continuous deployment (which Web Apps already provide, and which is how I already publish) but also backup, by pushing changed files back up to the Git repository (for example, if I were to write posts in the Grav Admin console, or upgrade the Grav software from it).

However, I stumbled onto a problem with this:

Git-Sync only syncs the `user` folder and what's beneath it, and nothing above it, such as `system`, `logs`, etc.

This means that while I can happily write content in the Admin console and have it synced back up to the repo, I can't do the same for the Grav software, plugins, etc. Updating them from the Admin console triggers Git-Sync, but Git-Sync first pulls down what's in the repo, essentially undoing the software upgrades! It also means I still can't back up Grav's logs, should I want to access them.

So what next?

I explored several options to provide a backup capability I was happy with. Remember that this is an experiment, and an excuse to learn more about Azure, so there's no up-front design - it's a voyage of discovery.

You may recall that in my last post I pondered a WebJob that would invoke Grav's own backup command to back up the entire site, and then move the backup off-site, as it were, to more resilient storage (the plan was to move it to Azure Blob Storage and remove the backup file from the site itself).

I developed a PowerShell script that does exactly that:

- Use Managed Service Identity (MSI) to retrieve secrets (the storage account key) from Azure Key Vault

- Invoke Grav's backup command (`bin/grav backup`) to back up the site to a zip file

- Invoke AzCopy to copy the zip file to Blob Storage

- Remove the zip file from the backup directory of the Web App's content
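The script itself is PowerShell; as a rough illustration of the same steps, here is a minimal Python sketch. It assumes the App Service managed identity environment variables `IDENTITY_ENDPOINT`/`IDENTITY_HEADER` (older platform versions used `MSI_ENDPOINT`/`MSI_SECRET`), Key Vault API version `7.4`, and hypothetical helper names (`managed_identity_token_request`, `key_vault_secret_request`, `backup_and_offload`). The token endpoint returns JSON whose `access_token` field is what the Key Vault call needs. The actual backup and AzCopy upload are passed in as callables, so the orchestration can be exercised without Azure.

```python
import os
import urllib.parse
import urllib.request
from pathlib import Path
from typing import Callable


def managed_identity_token_request(resource: str = "https://vault.azure.net") -> urllib.request.Request:
    """Build (but do not send) a token request to the App Service managed identity endpoint.

    Assumption: App Service sets IDENTITY_ENDPOINT and IDENTITY_HEADER when a
    managed identity is enabled (older versions exposed MSI_ENDPOINT/MSI_SECRET).
    """
    query = urllib.parse.urlencode({"resource": resource, "api-version": "2019-08-01"})
    return urllib.request.Request(
        f"{os.environ['IDENTITY_ENDPOINT']}?{query}",
        headers={"X-IDENTITY-HEADER": os.environ["IDENTITY_HEADER"]},
    )


def key_vault_secret_request(vault: str, secret: str, token: str) -> urllib.request.Request:
    """Build a GET for a Key Vault secret, authorised with the managed identity's token."""
    url = f"https://{vault}.vault.azure.net/secrets/{secret}?api-version=7.4"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


def backup_and_offload(
    backup_dir: Path,
    run_backup: Callable[[], None],
    upload: Callable[[Path], None],
) -> Path:
    """Run the site backup, upload the newest zip, then delete the local copy."""
    run_backup()  # e.g. subprocess.run(["php", "bin/grav", "backup"], check=True)
    zips = sorted(backup_dir.glob("*.zip"), key=lambda p: p.stat().st_mtime)
    if not zips:
        raise FileNotFoundError(f"no backup zip found in {backup_dir}")
    newest = zips[-1]
    upload(newest)   # hypothetical: shell out to AzCopy; expected to raise on failure
    newest.unlink()  # so the zip is only deleted once the upload has succeeded
    return newest
```

Keeping the upload step injectable is a design choice for the sketch: the same function works whether the upload is AzCopy, an SDK call, or a stub in a test.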

Now, as you'll recall, we're running on a D1 Web App instance for the live site, and F1 for testing. A consequence of using those lower tiers is that not only can you not use the built-in backup functionality, but while you can have a WebJob, it can only be triggered manually - you can't schedule it to run automatically.

Now, one of the driving forces in my career has been a determination to always find a solution and, frankly, to never let a computer beat me!

So I first explored Azure Batch and, after some time experimenting with it, discounted it for a couple of reasons:

- Azure Batch doesn't yet support MSI, and I'm keen to use that wherever possible rather than messing around registering applications in Azure Active Directory.

- Cost - even using low-priority compute nodes instead of dedicated nodes, Azure Batch was going to cost more, simply to run a WebJob once a day, than fit with my goal of optimising and minimising cost. Part of this is the compute cost (nodes consume resources and accrue cost while allocated, even when no batch jobs are running).

Hello Logic Apps!

So, after some research into Kudu, I opted for the following (I'll blog about how to set this up in a future post, which won't be part of the Grav in Azure series):

- Create an Azure Logic App with a scheduled trigger (05:30 daily) that:
    - Looks up the Kudu deployment credentials from Azure Key Vault using HTTP API calls
    - Parses the credentials from the JSON responses
    - Calls the Kudu WebJobs API to run the website backup WebJob

- Set up a Lifecycle Management policy in Blob Storage so that backups older than x days are automatically deleted, to curtail storage costs (Lifecycle Management policies can do other things too, such as moving files between tiers, from Hot -> Cool -> Archive)
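The Logic App makes these calls with its built-in HTTP actions and needs no code; the Python sketch below only shows what those requests and the retention policy look like. The Kudu endpoint `/api/triggeredwebjobs/{name}/run` (authenticated with the site's deployment credentials) and the Lifecycle Management rule schema are real; the site name, WebJob name, retention period and prefix are hypothetical, as are the helper names. Note that a lifecycle `prefixMatch` starts with the container name (e.g. `backups/`).

```python
import base64
import json
import urllib.request


def secret_value(key_vault_response: str) -> str:
    """Pull the secret out of a Key Vault 'get secret' JSON response body."""
    return json.loads(key_vault_response)["value"]


def webjob_run_request(site: str, job: str, user: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a POST that asks Kudu to run a triggered WebJob.

    user/password are the site's deployment (publishing) credentials,
    e.g. as retrieved from Key Vault with secret_value().
    """
    url = f"https://{site}.scm.azurewebsites.net/api/triggeredwebjobs/{job}/run"
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url, data=b"", method="POST", headers={"Authorization": f"Basic {token}"}
    )


def backup_retention_policy(days: int, prefix: str) -> dict:
    """Lifecycle Management policy that deletes backup blobs older than `days`."""
    return {
        "rules": [
            {
                "enabled": True,
                "name": "delete-old-backups",
                "type": "Lifecycle",
                "definition": {
                    "filters": {"blobTypes": ["blockBlob"], "prefixMatch": [prefix]},
                    "actions": {
                        "baseBlob": {"delete": {"daysAfterModificationGreaterThan": days}}
                    },
                },
            }
        ]
    }
```

To actually trigger the job you would pass the request to `urllib.request.urlopen`; `json.dumps(backup_retention_policy(30, "backups/"))` gives a policy document you could paste into the storage account's Lifecycle Management settings.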

I also wanted an automated process that, whenever I deploy new content, automatically clears my Cloudflare cache for the site and then re-populates it (so that visitors always get the most up-to-date content, quickly, from Cloudflare).

I initially looked at a custom deployment script, but discounted it because I don't want the cache cleared while the deployment is in progress. The last thing I want is a visitor hitting the origin in Azure while it's mid-build and showing a “site under construction” page - remember, we have a single Web App instance here.

I then figured I'd use Kudu post-deployment scripts. These only run once a deployment completes, and are simple to set up: just drop a PowerShell or batch script of a supported type into a particular directory (`site\deployments\tools\PostDeploymentActions`).

However, I quickly found that the PowerShell I'd developed locally didn't work as expected as a post-deployment script, due to the sandbox it runs in.

Another hurdle jumped using the Power(shell) of Function Apps - logically!

After some more research, I found that Kudu has yet more tricks up its sleeve - WebHooks.

So I set up another Logic App that waits for an incoming HTTP request, and registered its POST URL with Kudu. Now, whenever Kudu completes a deployment for this Web App (e.g. after I push a new blog post to the Git repo), it makes an HTTP call to my Logic App.
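One refinement worth considering (not something described above) is to only refresh the cache when the deployment actually succeeded. The Python sketch below assumes the Kudu webhook body is JSON with `status` (e.g. `"success"`) and `complete` fields, which is what Kudu's post-deployment payload is believed to contain; check a real request before relying on it. `should_refresh_cache` is a hypothetical name.

```python
import json


def should_refresh_cache(payload: str) -> bool:
    """Return True only for a completed, successful deployment.

    Assumption: the Kudu webhook payload carries "status" and "complete";
    verify the field names against a real request first.
    """
    body = json.loads(payload)
    return body.get("complete") is True and body.get("status") == "success"
```

Inside the Logic App itself, the equivalent would be a Condition action on the trigger body, so a failed deployment never reaches the cache-purge step.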

The Logic App then calls an Azure PowerShell Function, developed from the PowerShell script I'd already written.

So it flows as follows:

- Deploy content

- Kudu Post-Deployment webhook triggered

- Kudu calls Logic App

- Logic App calls PowerShell Function

- PowerShell Function (MSI enabled) retrieves the necessary secrets from Key Vault (Cloudflare API auth keys, zone IDs, etc.)

- PowerShell Function calls the Cloudflare API to purge the cache

- PowerShell Function retrieves the `sitemap.xml` file from the site (via Cloudflare, which in this case pulls it from the origin in Azure because the cache is empty)

- PowerShell Function retrieves the content of each page in the sitemap, essentially re-caching all those pages and their assets (images, JavaScript, CSS, etc.)
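The Function itself is PowerShell; here is a minimal Python sketch of the purge-and-warm steps. It assumes Cloudflare API-token authentication (`Authorization: Bearer ...`); with a global API key you would send `X-Auth-Email`/`X-Auth-Key` headers instead. The zone ID, token and helper names are hypothetical. The sitemap namespace is the standard sitemaps.org one. Unlike the Function described above, `warm_cache` only requests the page URLs themselves; fetching each page's images, CSS and JavaScript would need an extra HTML-parsing step.

```python
import json
import urllib.request
import xml.etree.ElementTree as ET
from typing import Callable, Iterable

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def purge_everything_request(zone_id: str, api_token: str) -> urllib.request.Request:
    """Build (but do not send) a Cloudflare 'purge everything' request for one zone."""
    url = f"https://api.cloudflare.com/client/v4/zones/{zone_id}/purge_cache"
    body = json.dumps({"purge_everything": True}).encode()
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Authorization": f"Bearer {api_token}", "Content-Type": "application/json"},
    )


def sitemap_urls(sitemap_xml: str) -> list[str]:
    """Return every <loc> URL listed in a standard sitemap.xml document."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]


def warm_cache(urls: Iterable[str], fetch: Callable[[str], int]) -> dict[str, int]:
    """Request each URL once (so Cloudflare re-caches it); return status codes by URL."""
    return {url: fetch(url) for url in urls}
```

In real use `fetch` could be `lambda u: urllib.request.urlopen(u).status`, run only after the purge request has been sent.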

Again, I'll post in more detail on the post-deployment Logic App/Function App later, but here are a few quick points on using these:

- Easy to set up, and Logic Apps in particular require no coding - everything can be done in a GUI in the Azure Portal.

- If you have WebJobs you want to run on a schedule on an F1 or D1 tier Web App, triggering them from a Logic App is much less expensive than setting up an Azure Batch environment.

- Azure PowerShell Functions are awesome!

So, in summary then…

Yes, you can build a responsive and reasonably resilient blog in Azure at minimal cost.

However, the major enabler for me was Cloudflare. Without it, I'd have needed to increase cost significantly, with a higher-tier Web App instance (in fact, several of them), Azure Traffic Manager, Azure Redis Cache, etc.

There are some trade-offs in using a lower-tier (less expensive) Web App, not least that I know I don't have quite as much resilience as a production site should have.

There are, however, lots of Azure features that let me mitigate the limitations of my cost-optimisation mindset - the combination of Kudu, Logic Apps, PowerShell Functions and Managed Service Identity is very powerful from an automation perspective!

And if I find that I need better resilience and scaling options, I can get them without starting all over again - changing tier is minimally disruptive (and with Cloudflare on the front end, I can hide most, if not all, of that).

Either way, mission accomplished - I achieved everything I wanted to, learned a lot, and have scope to scale if I need to (e.g. if this blog takes off!).

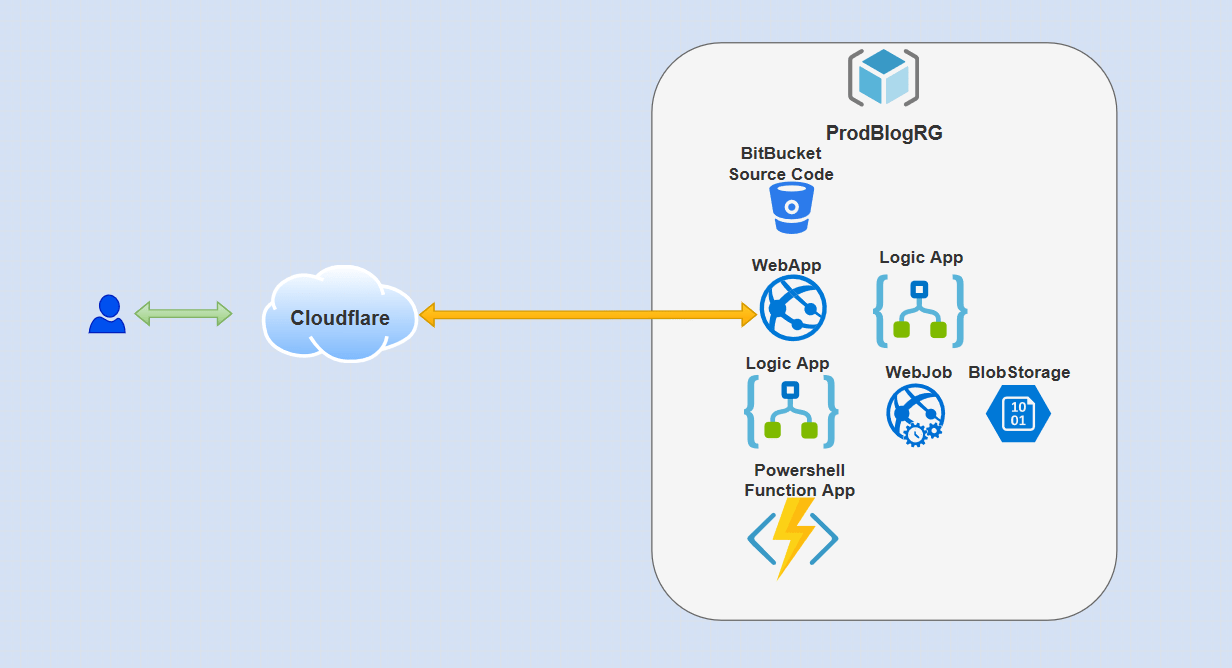

To wrap up - here’s a simple diagram of what I built, and I hope you’ve enjoyed this blog series!

As ever, thanks for reading and feel free to leave comments below.