Image by John Salvino on Unsplash


From the beginning, the intention was to minimise the cost outlay whilst still using and learning about Azure, and I promised to keep you up to date on my experience: what worked, what didn’t, and so on.

So, fast forward several months, how has it been?

The Good

At this point I should stress that I have a lot of love for Grav - it’s massively powerful, flexible, extensible and on a decent machine it’s fast and responsive.

As Open Source Software (OSS), Grav has a great community around it and a great ecosystem of plugins and themes.

It looks (to me at least) professional, and makes my web development skills look better than they really are. I’m an infrastructure techie, not a developer, so having a site that looks halfway professional is a testament to the underlying solution rather than to my web development skills.

I liked that I could use a web-based editor to write my posts if I so desired, and I also like that I can write some markdown and commit it to the git repository from my mobile or my laptop - plenty of flexibility in my publishing workflow.

The Not So Good

This isn’t meant to be me having a go at Grav - it’s a superb CMS, and I think that compared to its competitors you get far more bang for your buck in terms of performance and functionality versus cost.

However, I found that despite all the effort I put into optimising performance on the back end (in Grav) and in Cloudflare as my front end/CDN, any time a request couldn’t be served by Cloudflare at the edge and had to go back to the Azure origin, a D1 (shared, dev/test) webapp instance just couldn’t cut it - especially if it was the first request to hit the origin in a while.

All this was to be expected of course - it’s a non-production, shared instance - but my mission was to stretch that as far as I could and minimise cost.

So, what now?

Stepping back for a minute, the reason for the lacklustre performance on a low-powered webapp is, funnily enough, that although Grav is very efficient for a Content Management System (CMS), it’s still a CMS: it runs PHP on the server to build pages, and by its very nature that needs a bit of oomph.

I started to look into running a static website in Azure Storage, but I didn’t want to write HTML by hand. I still wanted to just write markdown - it’s a fantastic way to write web content.

So, I started to look into static website generators, in particular Hugo, which has been getting a lot of positive attention recently.

To quote Micheal Colhoun on Twitter:

This is the way to go. When we created CMS systems in the 1990, we didn't design it in a time where storage and deployment infrastructure was cheaper than computer time.

— Micheal Colhoun (@michealcolhoun) October 21, 2019

And that’s the nub of it: I can either spend more to get sufficient performance, or I can run a static site on Azure Storage.

What is the cost difference?

On the topic of cost - my D1 webapp instance costs me around £6.89+VAT per month.

A static site in an Azure Storage account has cost me around £0.03+VAT per month while I’ve been testing it - a reduction of more than 99% - and a static site is easier to cache in Cloudflare (or any other CDN) than dynamic content from a CMS.
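As a quick sanity check on that percentage, here is the arithmetic in a few lines of Python, using only the two monthly figures quoted above. VAT applies to both, so it doesn’t change the ratio. The function name is just for illustration.

```python
def percent_reduction(old_cost: float, new_cost: float) -> float:
    """Percentage saved moving from old_cost to new_cost (same currency, same period)."""
    if old_cost <= 0:
        raise ValueError("old_cost must be positive")
    return (old_cost - new_cost) / old_cost * 100


if __name__ == "__main__":
    # Monthly figures from the text, in GBP excluding VAT.
    webapp_d1 = 6.89
    static_site = 0.03
    print(f"{percent_reduction(webapp_d1, static_site):.1f}% reduction")  # prints "99.6% reduction"
```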

But what about security?

Well, you may think that to host a website in Azure Storage you’d need the blob container to be public, right? Otherwise, how would people see your files (the website)?

Wrong, as it turns out. Keeping the $web container private simply means that accessing the content through the blob endpoint, using the URL below (or the equivalent for any other file in the $web container), is prohibited.

https://storage_account_name.blob.core.windows.net/$web/index.html

But if you use the static website URL, such as the one below, you have full access to the web resources through that route. So making the container private in essence just forces the use of the web.core URL rather than the blob.core URL.

https://storage_account_name.z6.web.core.windows.net/index.html
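If you want to see the difference for yourself, the sketch below builds both URLs for a file and reports the status an anonymous GET gets back. The account name and the `z6` zone are the placeholders from the examples above; your account’s zone may differ, so copy the primary static website endpoint from the portal. The function names are my own, purely for illustration.

```python
from typing import Optional
from urllib.error import HTTPError
from urllib.request import urlopen


def blob_endpoint(account: str, path: str) -> str:
    """Blob service URL for a file in $web: refused to anonymous users while $web is private."""
    return f"https://{account}.blob.core.windows.net/$web/{path.lstrip('/')}"


def web_endpoint(account: str, zone: str, path: str) -> str:
    """Static website URL: serves $web content regardless of the container's access level."""
    return f"https://{account}.{zone}.web.core.windows.net/{path.lstrip('/')}"


def anonymous_status(url: str, timeout: float = 10.0) -> Optional[int]:
    """HTTP status for an unauthenticated GET, or None if the host can't be reached."""
    try:
        with urlopen(url, timeout=timeout) as resp:
            return resp.status
    except HTTPError as err:  # 4xx/5xx responses still carry a status code
        return err.code
    except OSError:  # URLError (DNS failure, refused connection) and socket timeouts
        return None


if __name__ == "__main__":
    # Placeholders: substitute your own account name and zone.
    print(blob_endpoint("storage_account_name", "index.html"))
    print(web_endpoint("storage_account_name", "z6", "index.html"))
```

With the container private, calling `anonymous_status` on the blob URL should come back with a 4xx error, while the web URL should return 200 for a file that exists.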

In addition, as you’ll have seen in my blog post Grav in Azure part 6 - Optimising Grav for performance and security, I like my sites to follow security best practice as much as possible, which means using HTTP response headers such as Content-Security-Policy and others.

Now, I could go and learn how to do this with Hugo, because there won’t be a web.config file for my static site: even if I put one in the container, nothing runs server-side to act on it. It’s static content, with all the rendering done in your browser.

But I decided I wanted a consistent experience no matter the underlying website solution I use (Grav on App Service, Hugo on Static Website or something else down the road that I want to try).

So, I wanted to front-end the static website with a Function Proxy (as previously blogged about).

This gives me a lot more capabilities around SSL/TLS, custom domains and so on, and as I’m a fan of Azure Functions anyway, I was always going to want to front-end my site this way. It also means that I can steer traffic away from accessing the storage account directly.
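For reference, a Function Proxy is configured through a `proxies.json` file in the Function App (a feature of the 1.x to 3.x Functions runtimes). The Python sketch below generates one; it isn’t my actual configuration. It forwards `/` to `index.html` and every other path straight through to the static website endpoint, and uses `responseOverrides` to add security headers on the way back. The proxy names, the backend host and the header values are all example assumptions, and a real Content-Security-Policy in particular needs tuning to whatever your theme loads.

```python
import json
from typing import Dict

# Example header values only: tune the CSP for the scripts, styles and fonts your theme uses.
SECURITY_HEADERS: Dict[str, str] = {
    "Content-Security-Policy": "default-src 'self'",
    "Strict-Transport-Security": "max-age=31536000",
    "X-Content-Type-Options": "nosniff",
    "Referrer-Policy": "strict-origin-when-cross-origin",
}


def build_proxies(backend_host: str) -> dict:
    """Build a proxies.json document that forwards GETs to a static website endpoint."""
    # Each "response.headers.<Name>" override sets that header on the proxied response.
    overrides = {f"response.headers.{name}": value
                 for name, value in SECURITY_HEADERS.items()}
    return {
        "$schema": "http://json.schemastore.org/proxies",
        "proxies": {
            # The bare root is mapped to the site's index document.
            "root": {
                "matchCondition": {"methods": ["GET"], "route": "/"},
                "backendUri": f"https://{backend_host}/index.html",
                "responseOverrides": overrides,
            },
            # Every other path is passed through unchanged via the catch-all parameter.
            "everythingElse": {
                "matchCondition": {"methods": ["GET"], "route": "/{*restOfPath}"},
                "backendUri": f"https://{backend_host}/{{restOfPath}}",
                "responseOverrides": overrides,
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_proxies("storage_account_name.z6.web.core.windows.net"), indent=2))
```

Save the output as `proxies.json` in the root of the Function App.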

But what about performance any time you go back to the origin?

I’ve been running a website for a local community group for a while and just like my blog, it runs on Grav.

So, I converted my content into the format Hugo needs (it’s still markdown, but some formatting changes are required, which I will blog about later - PowerShell to the rescue!).
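The actual PowerShell will come in a later post. In the meantime, here is a rough Python sketch of two changes a Grav-to-Hugo move typically involves. It assumes each page’s front matter has already been parsed into a dictionary (for example with a YAML library). Grav keeps tags and categories under a nested `taxonomy` block, whereas Hugo’s default taxonomies are top-level `tags` and `categories`; and Grav’s numeric folder prefixes such as `01.` need dropping. The function names are hypothetical, and this isn’t my conversion script.

```python
import re
from typing import Any, Dict, List

# Grav orders page folders with numeric prefixes, e.g. "01.blog" or "02.my-first-post".
_ORDER_PREFIX = re.compile(r"^\d+\.")


def hugo_slug(grav_folder: str) -> str:
    """Strip Grav's ordering prefix so the folder name can double as a Hugo slug."""
    return _ORDER_PREFIX.sub("", grav_folder)


def _as_list(value: Any) -> List[Any]:
    """Grav accepts a single string or a list; Hugo expects a list."""
    return [value] if isinstance(value, str) else list(value)


def grav_to_hugo_front_matter(grav: Dict[str, Any]) -> Dict[str, Any]:
    """Lift tag/category out of Grav's taxonomy block into Hugo's top-level keys.

    Any other taxonomy keys are dropped in this sketch.
    """
    hugo = {key: value for key, value in grav.items() if key != "taxonomy"}
    taxonomy = grav.get("taxonomy") or {}
    if taxonomy.get("tag"):
        hugo["tags"] = _as_list(taxonomy["tag"])
    if taxonomy.get("category"):
        hugo["categories"] = _as_list(taxonomy["category"])
    return hugo
```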

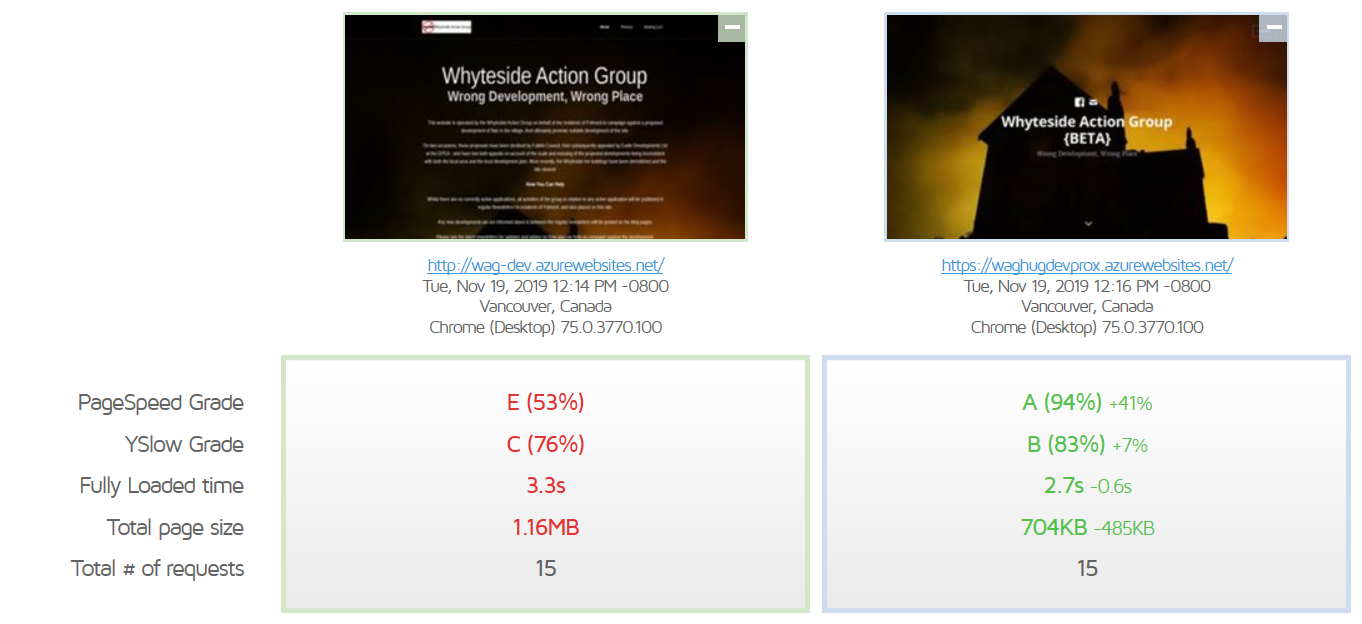

Using GTMetrix, I compared the non-prod version of that site (no CDN, F1 Web App) with the Azure Function Proxy front-ended static website (again, accessing directly rather than via Cloudflare) and the results are astonishing:

Remember that in production this is front-ended by Cloudflare and its CDN, and static content is much, much easier to cache, so we will hit the origin far less frequently anyway. But any time we do hit the origin now, it will be far more responsive, with less impact on the visitor.

There is the small issue that, because I’m using a Function App on a Consumption plan, there can be cold starts (which may or may not be any worse than the lag on a shared F1 WebApp - something I still need to measure). I have some thoughts on improving that and may well blog about it when I have a solution. For now, though, we have an amazing improvement in performance and cacheability, and a cost reduction of more than 99% compared to the current solution. OK, maybe add on a few pennies a month for Function Proxy executions, but unless my website suddenly becomes the most popular on the planet, that’s not going to make a dent!
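Since measuring cold starts is still on the to-do list, one way to approach it is a small Python timer that makes a few sequential requests and records how long each takes. Run it after the Function App (or the F1 Web App) has sat idle for a while: a first request that is much slower than the rest points to a cold start. The function names and defaults are illustrative assumptions, and the `get` parameter exists only so the request can be swapped out, for example in a test.

```python
import time
from typing import Callable, List
from urllib.request import urlopen


def fetch(url: str) -> None:
    """Download the whole response so each timing covers the full body, not just the headers."""
    with urlopen(url, timeout=60) as resp:
        resp.read()


def time_requests(url: str, count: int = 5, pause_s: float = 0.0,
                  get: Callable[[str], None] = fetch) -> List[float]:
    """Time `count` sequential requests, in seconds; a slow first one after idling suggests a cold start."""
    timings: List[float] = []
    for _ in range(count):
        start = time.perf_counter()
        get(url)
        timings.append(time.perf_counter() - start)
        if pause_s:
            time.sleep(pause_s)
    return timings
```

For example, `time_requests("https://your-function-app.azurewebsites.net/")`, where the host name is a placeholder for your own Function App.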

Very good, but what about your blogging workflow?

Now, because I’m using a static website in Azure Storage, I can’t publish quite so easily, especially on the go: I won’t always be writing on a laptop with access to Azure Storage Explorer.

But this has given me a reason (after reading a few blogs and the Microsoft docs) to try Azure DevOps, so that when I push content to a git repository, it triggers a build of the Hugo site and uploads the new content to the storage account. That gives me the same old flow of publishing from VSCode or Git2Go.

I have that working pretty well - you guessed it, that will be the subject of a blog post in its own right.
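The pipeline itself will get its own post, but the two steps it needs to run are simple enough to sketch: build the site with Hugo, then copy the output into the `$web` container with the Azure CLI. The Python below only illustrates those two commands; it isn’t my Azure DevOps pipeline definition. It assumes `hugo` and `az` are on the PATH, that you’ve signed in with `az login` (or the pipeline has an equivalent service connection), and that the identity has a data-plane role such as Storage Blob Data Contributor, which `--auth-mode login` requires. The function names are hypothetical.

```python
import subprocess
from typing import List


def deployment_commands(account: str, output_dir: str = "public") -> List[List[str]]:
    """The two steps: render the site, then upload the rendered files to $web."""
    return [
        # Render markdown into static HTML under output_dir ("public" is Hugo's default).
        ["hugo", "--minify", "--destination", output_dir],
        # Copy everything in output_dir into the static website container.
        ["az", "storage", "blob", "upload-batch",
         "--account-name", account,
         "--destination", "$web",  # no shell is involved, so $web is passed literally
         "--source", output_dir,
         "--auth-mode", "login"],
    ]


def deploy(account: str, site_dir: str = ".") -> None:
    """Run each command from the Hugo site's root, stopping at the first failure."""
    for cmd in deployment_commands(account):
        subprocess.run(cmd, check=True, cwd=site_dir)
```

Passing each command as a list rather than a shell string also sidesteps a common gotcha: in bash, an unquoted `$web` is expanded as a variable, so it needs single quotes there.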

This is running on a beta version of the local community website that I run, and will do so for a little while longer before I convert my blog to use the same setup. I haven’t yet come across a reason not to, though - it’s all looking very promising!

Again, this won’t suit every scenario, but for a small blog it’s a way to deliver a lot for next to nothing in outlay, which is ideal for many.

Look out for more detail on some aspects of the solution and the migration from Grav to Hugo in future posts.

As ever, thanks for reading and feel free to leave comments below.