Hero Image generated by ChatGPT

This is a personal blog and all content therein is my personal opinion and not that of my employer.


Introduction

In this post, I’m going to talk about an issue I spotted recently within Microsoft Azure.

In my last post I talked about the VaultRecon issue that I discovered in Microsoft Azure.

I also hinted that the issue doesn’t just exist in the Microsoft.KeyVault provider…

If you haven’t read my last post, please read it first - there are concepts explained there that are assumed knowledge in this post.

What happened?

As I mentioned in that post, I found it unlikely that Microsoft.KeyVault was the only affected provider, so I decided to check other providers in the same way.

I also mentioned in that post that just knowing the metadata of the secrets in your Key Vaults could be useful to an attacker, helping them focus future efforts on the secrets where finding another attack path might be worth the effort.

Enter Azure Logic Apps

An obvious next target for me was Azure Logic Apps which Microsoft describes as:

“a cloud platform where you can create and run automated workflows with little to no code. By using the visual designer and selecting from prebuilt operations, you can quickly build a workflow that integrates and manages your apps, data, services, and systems.”

A fan of them myself, I knew that one common action in Logic App workflows is to integrate with a Key Vault to retrieve and/or update a secret object.

One thing I’d never really explored, though, was their RBAC model: operating in my own tenant, I tend to be the owner of anything I deploy anyway.

So, I deployed a Logic App with the following properties:

- Consumption SKU, i.e. multi-tenant, with no other supporting infrastructure in my tenant

- A system-assigned managed identity created for the Logic App

- The managed identity assigned a secret reader role on one of my existing Key Vaults

- A simple workflow with a Get_secret_version built-in action that retrieves a particular secret from the vault

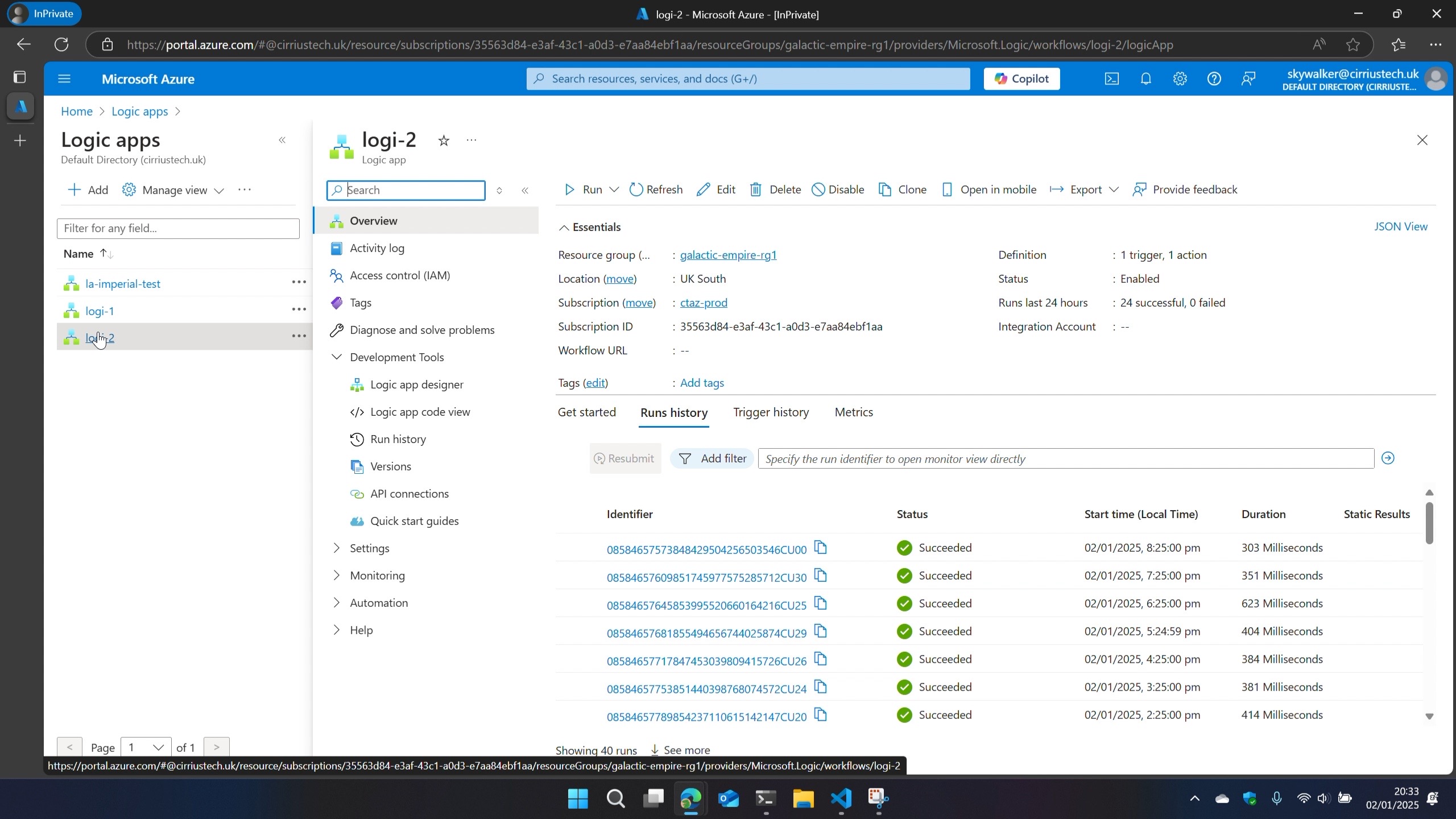

I ran it a few times to check it worked.

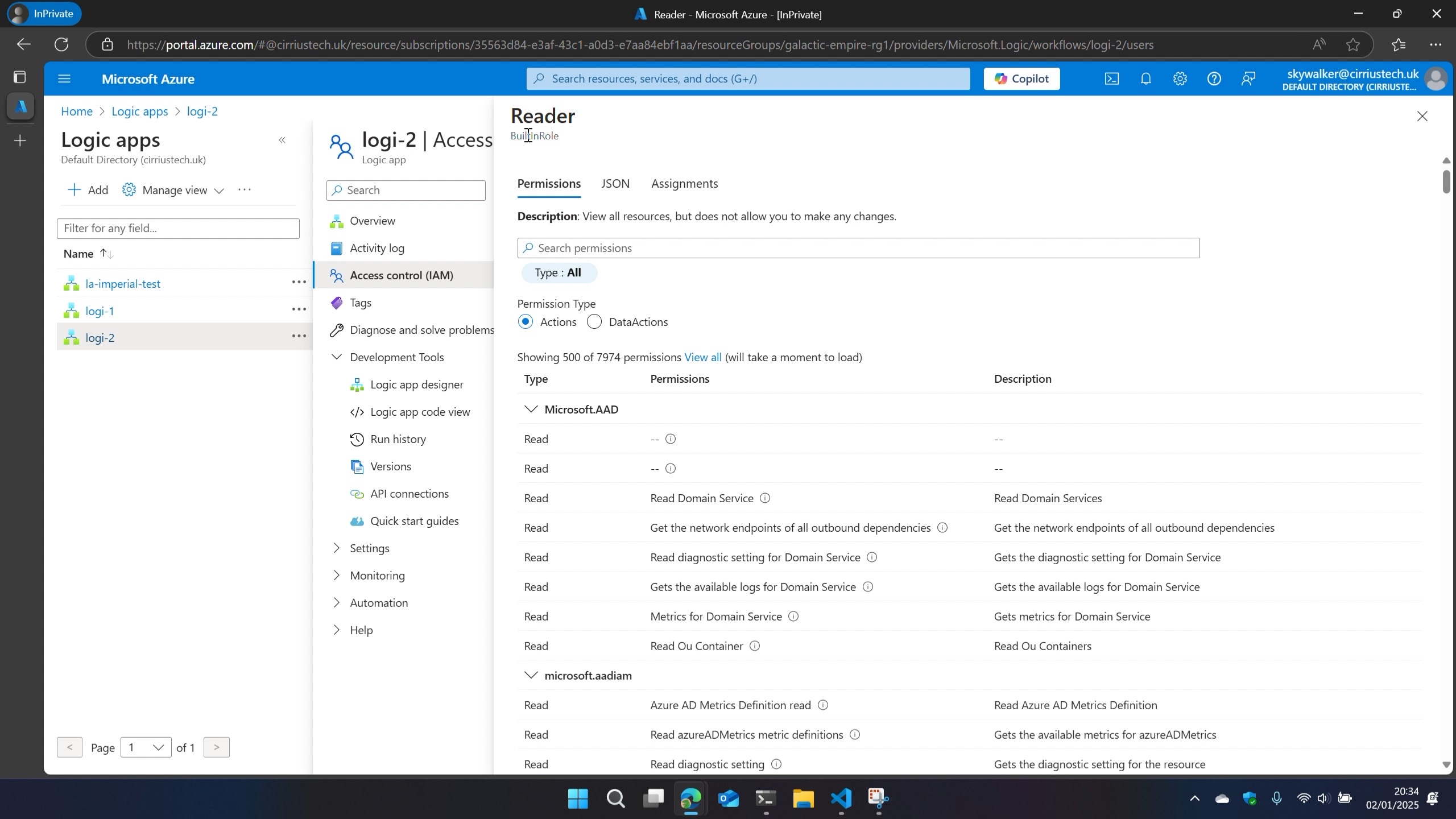

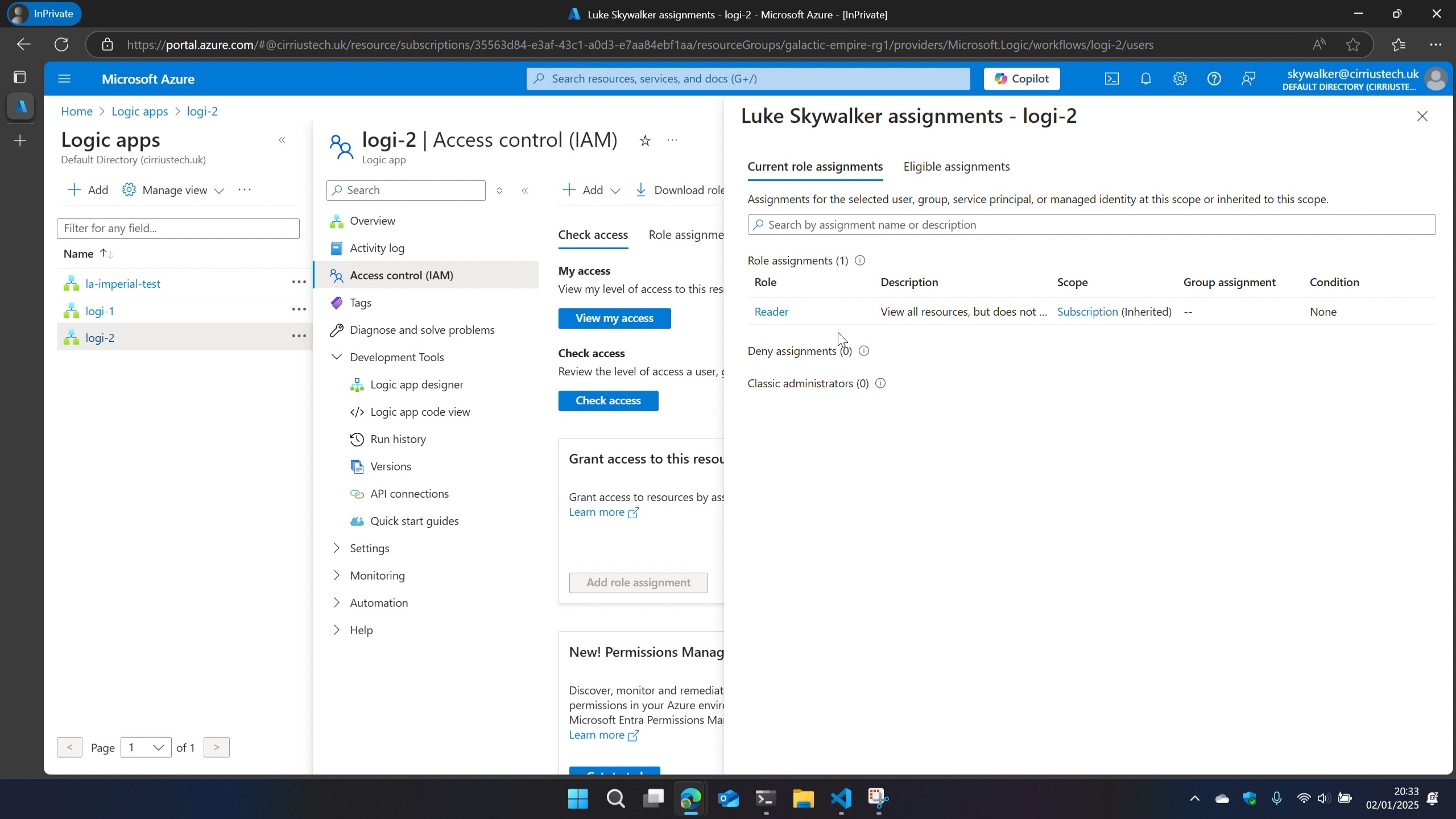

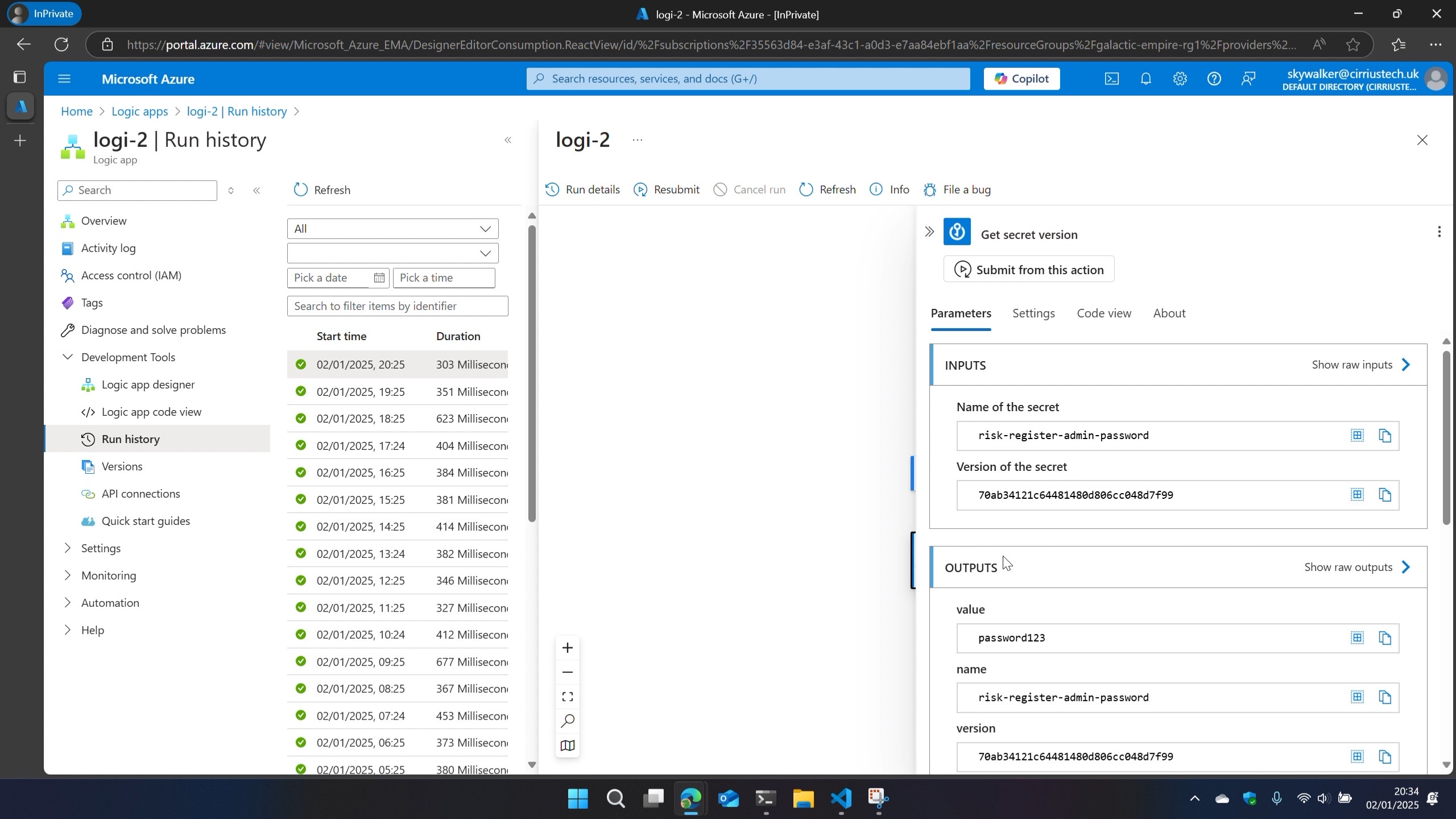

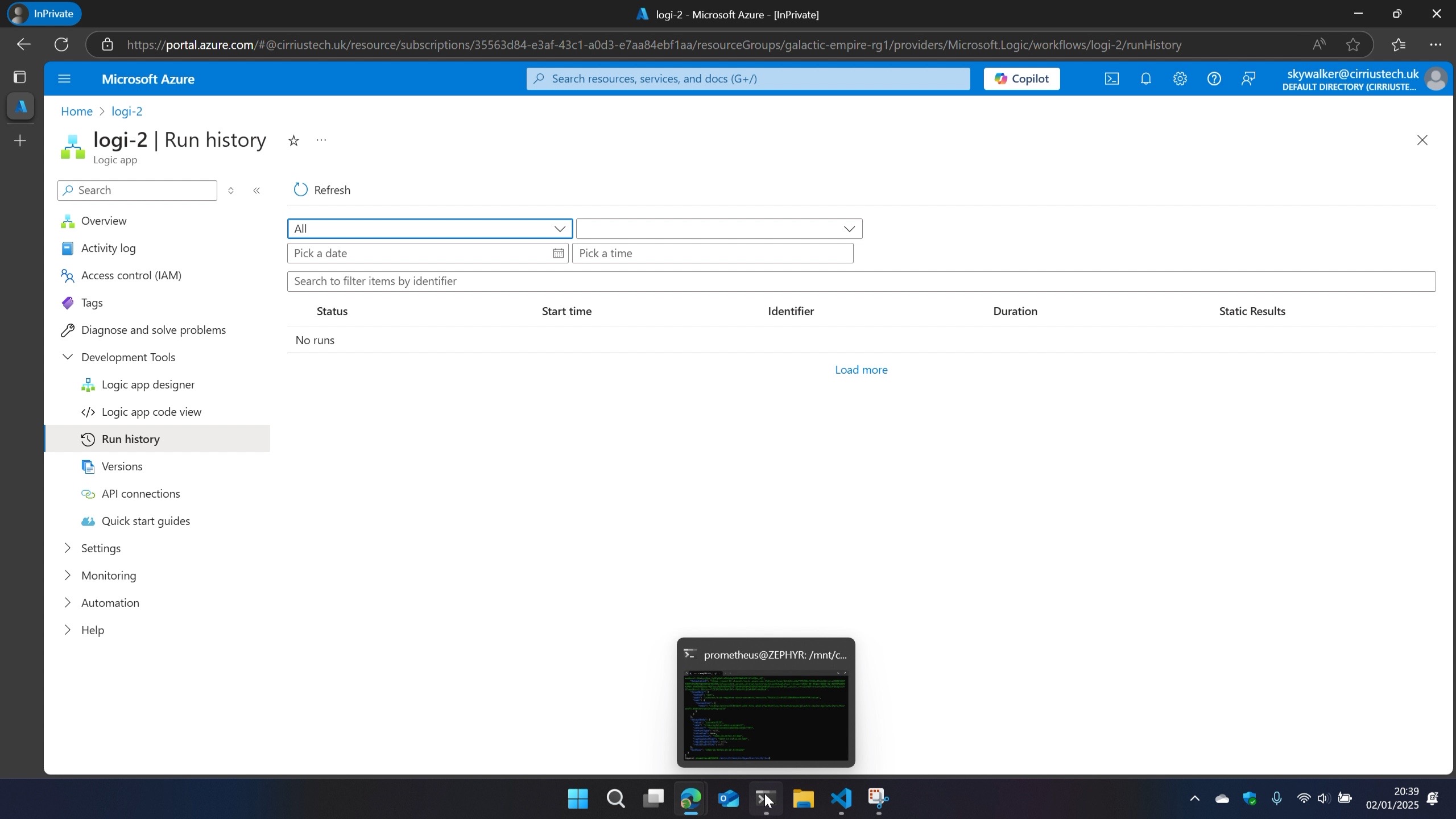

I then logged out of my admin user in the Azure Portal and logged in with a user that only has the Reader built-in Azure RBAC role - no Logic App specific roles.

To my surprise, I was still able to view all the run histories and their outputs, even as Reader (again, a failure of the RBAC model to isolate metadata and configuration data from actual data).

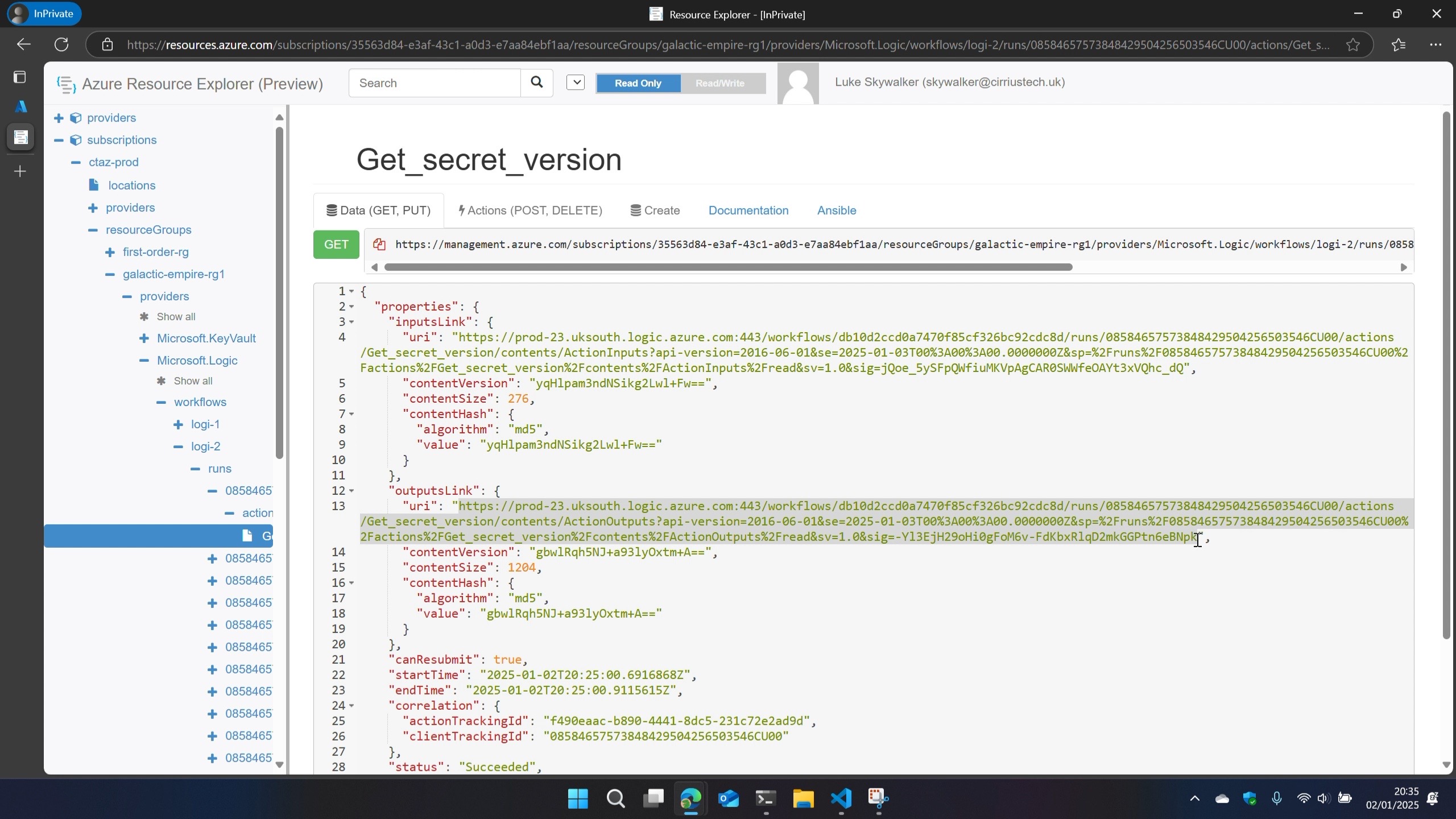

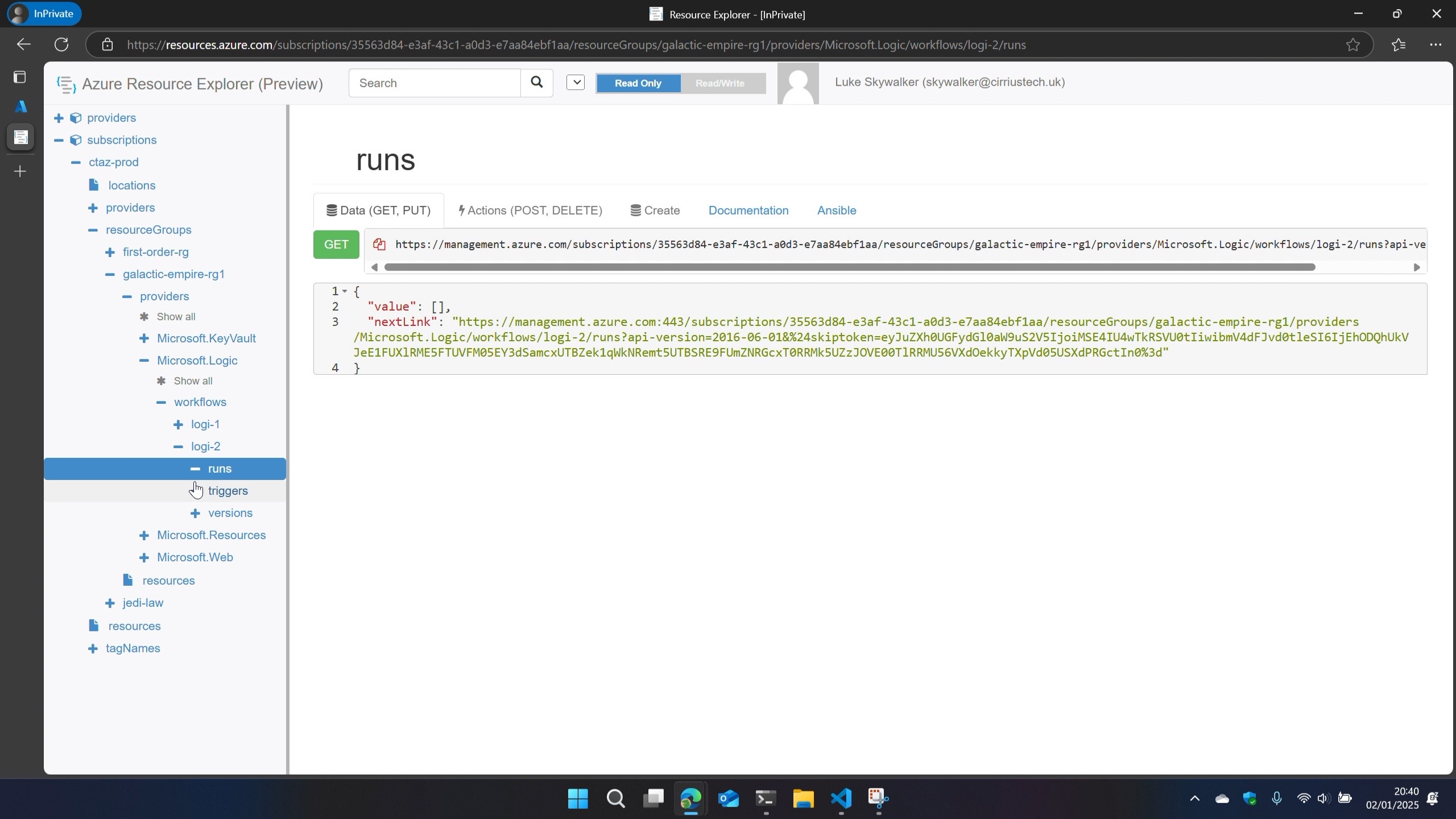

Back in **Azure Resource Explorer**, I walked the configuration of the resource I was interested in, expanding subscriptions > resourceGroups > resourceGroupName > providers > Microsoft.Logic > workflows > workflowName > runs > runId > actions. Under actions, I noticed child nodes named after the action(s) in the workflow.

In here I could see metadata about those actions during that run including content hashes.
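Resource Explorer is only a browser over ARM REST paths, so the node path above corresponds to a plain management.azure.com URL. Here's a minimal sketch of that mapping, assuming the 2016-06-01 api-version of the Microsoft.Logic provider (the version used by the "Workflow Run Actions - List" operation); the identifiers in the usage example are hypothetical. It only builds the URL: an actual GET still needs an ARM bearer token, which is deliberately left out.

```python
from urllib.parse import quote

# Assumption: api-version used by the Microsoft.Logic "Workflow Run Actions - List" call.
API_VERSION = "2016-06-01"


def run_actions_url(subscription_id: str, resource_group: str,
                    workflow_name: str, run_id: str) -> str:
    """Build the ARM URL behind the runs > runId > actions node.

    Each path segment mirrors a node expanded in Azure Resource Explorer.
    """
    segments = [
        "subscriptions", subscription_id,
        "resourceGroups", resource_group,
        "providers", "Microsoft.Logic",
        "workflows", workflow_name,
        "runs", run_id,
        "actions",
    ]
    # Percent-encode each segment on its own so a '/' inside a name
    # cannot change the shape of the path.
    path = "/".join(quote(s, safe="") for s in segments)
    return f"https://management.azure.com/{path}?api-version={API_VERSION}"


if __name__ == "__main__":
    # Hypothetical identifiers, for illustration only.
    print(run_actions_url("00000000-0000-0000-0000-000000000000",
                          "rg-demo", "la-demo", "RUN-ID"))
```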

What I wasn’t expecting to see right there in the CONTROL PLANE were SAS URLs…

SAS URLs - what’s the big deal?

A SAS URL is described by Microsoft as:

A shared access signature (SAS) provides secure delegated access to resources … With a SAS, you have granular control over how a client can access your data. For example: What resources the client may access. What permissions they have to those resources. How long the SAS is valid.

In essence, then, they are pre-authenticated links that you can share with someone to give them granular, time-bound access to a resource.
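That "granular control" lives in the query string of the URL itself. As a minimal sketch, the snippet below splits a SAS URL into the parameters commonly used by Azure Storage SAS tokens (sv, sp, st, se, sig); the example URL is hypothetical and the signature is a placeholder. Whoever holds the full URL, including sig, holds the access, which is why the sketch redacts it rather than printing it.

```python
from urllib.parse import parse_qs, urlsplit

# Common SAS query parameters (as used by Azure Storage SAS tokens):
#   sv = signed version, sp = signed permissions, st = signed start,
#   se = signed expiry,  sig = signature (the secret part of the link)
SAS_PARAMS = ("sv", "sp", "st", "se", "sig")


def sas_fields(url: str) -> dict:
    """Return the SAS parameters present in a URL, with the signature redacted."""
    query = parse_qs(urlsplit(url).query)  # values are percent-decoded lists
    fields = {k: query[k][0] for k in SAS_PARAMS if k in query}
    if "sig" in fields:
        fields["sig"] = "<redacted>"       # never log the real signature
    return fields


if __name__ == "__main__":
    # Hypothetical URL; PLACEHOLDER is not a real signature.
    example = ("https://example.blob.core.windows.net/container/file.txt"
               "?sv=2022-11-02&sp=r&se=2025-01-29T11%3A00%3A00Z&sig=PLACEHOLDER")
    print(sas_fields(example))
```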

I’d come across these before with Azure Storage Accounts as a way to share access to a file (or full storage blob) with someone without giving them permission permanently and without them even having to have a user identity in your tenant.

While they can be useful in some scenarios, they are fraught with danger - Microsoft themselves even state:

“If a SAS is leaked, it can be used by anyone who obtains it, which can potentially compromise your storage account.”

Although a SAS can be secured with Microsoft Entra credentials, SAS tokens can be, and commonly are, signed with one of the account keys of the resource being accessed (e.g. a storage account).

Microsoft therefore provides guidance around SAS usage, including the following:

- Use a user delegation SAS when possible. A user delegation SAS provides superior security to a service SAS or an account SAS. A user delegation SAS is secured with Microsoft Entra credentials, so that you do not need to store your account key with your code.

- Have a revocation plan in place for a SAS. Make sure you are prepared to respond if a SAS is compromised.

- Create a stored access policy for a service SAS. Stored access policies give you the option to revoke permissions for a service SAS without having to regenerate the storage account keys.

- Use near-term expiration times on an ad hoc service SAS or account SAS. In this way, even if a SAS is compromised, it’s valid only for a short time.

- There is no direct way to identify which clients have accessed a resource.

- Use Azure Monitor and Azure Storage logs to monitor your application.

- Configure a SAS expiration policy for the storage account. Best practices recommend that you limit the interval for a SAS in case it is compromised. By setting a SAS expiration policy for your storage accounts, you can provide a recommended upper expiration limit when a user creates a service SAS or an account SAS.
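The expiry-related recommendations come down to simple arithmetic on a token's st (signed start) and se (signed expiry) values. Here's a minimal sketch of the comparison an expiration policy implies; it is an illustration, not how Azure Storage enforces the policy, and it assumes second-precision timestamps with a trailing Z (real tokens may use other ISO 8601 forms).

```python
from datetime import datetime, timedelta

# Assumption: st/se use second precision with a trailing 'Z',
# e.g. "2025-01-29T07:00:00Z". Real tokens may use other ISO 8601 forms.
SAS_TIME_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def sas_lifetime(st: str, se: str) -> timedelta:
    """Validity window of a SAS, from its signed start to its signed expiry."""
    start = datetime.strptime(st, SAS_TIME_FORMAT)
    expiry = datetime.strptime(se, SAS_TIME_FORMAT)
    return expiry - start


def exceeds_policy(st: str, se: str, max_interval: timedelta) -> bool:
    """True if the SAS is valid for longer than the recommended upper limit."""
    return sas_lifetime(st, se) > max_interval


if __name__ == "__main__":
    window = sas_lifetime("2025-01-29T07:00:00Z", "2025-01-29T11:00:00Z")
    print(window)                                        # 4:00:00
    print(exceeds_policy("2025-01-29T07:00:00Z", "2025-01-29T11:00:00Z",
                         timedelta(hours=1)))            # True
```

A policy limit of one hour would flag a four-hour window like this one; the comparison is strict, so a SAS exactly at the limit passes.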

It seems, then, that SAS URLs are not just used with storage accounts (or, perhaps more likely with this being a PaaS service, there is a Microsoft-managed storage account in their tenant that you can’t see).

The Issue

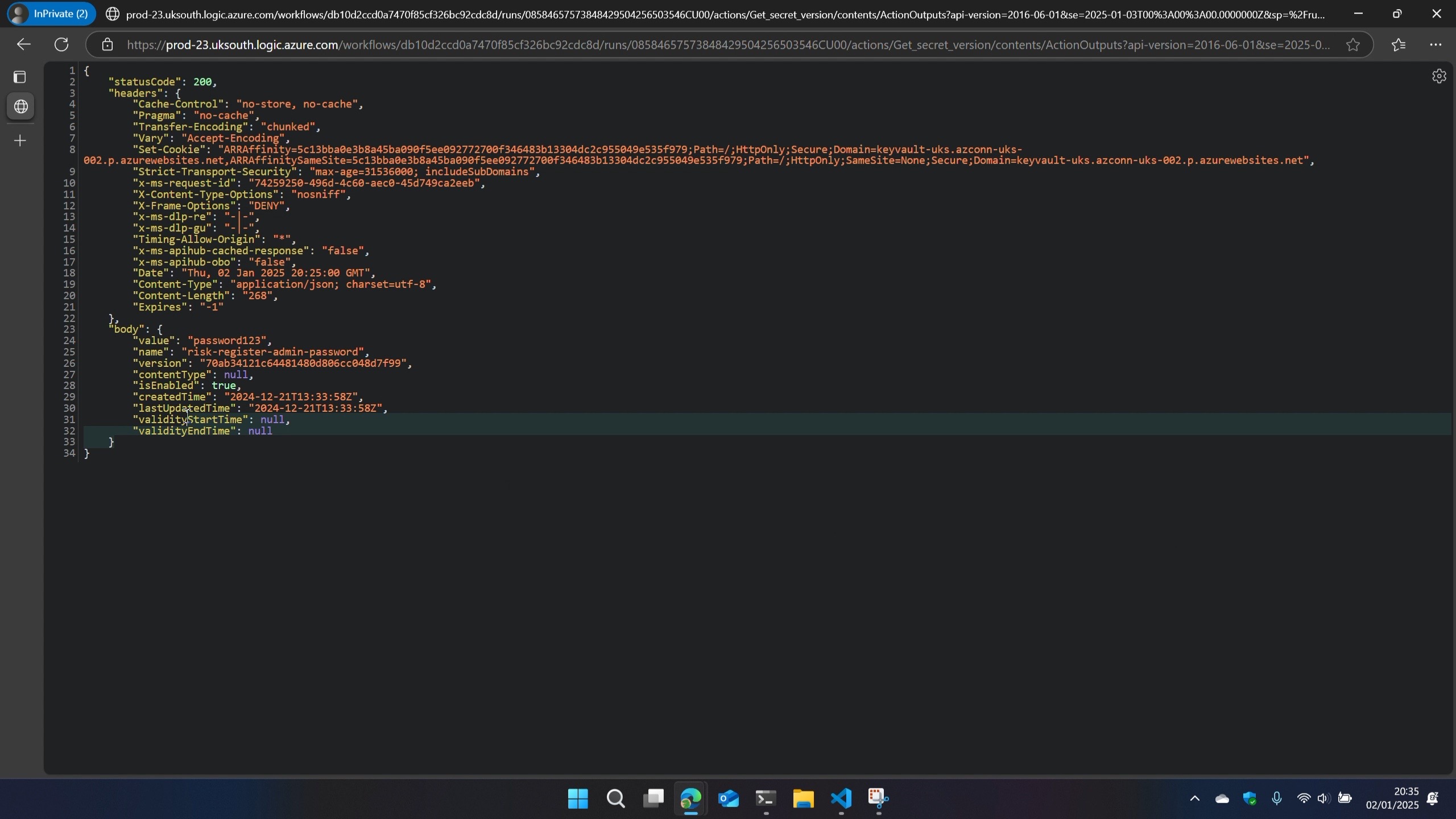

If you copy the inputsLink or outputsLink URL from Azure Resource Explorer and paste it into your browser, you get the full input or output of the action, exactly as you’d see it in the run history in the Azure Portal.
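From a defender's point of view, the useful question is which actions in a run expose these links at all. Here's a minimal sketch, assuming the response shape of the Logic Apps "Workflow Run Actions - List" REST operation (each action's properties may carry inputsLink/outputsLink objects with a uri field); the sample data is hypothetical. It reports where links exist and strips the query string, so it never prints a usable SAS.

```python
from typing import Dict, List, Tuple


def content_links(actions_response: Dict) -> List[Tuple[str, str, str]]:
    """List (action name, link kind, URI without query string) for each exposed link.

    `actions_response` is assumed to be the parsed JSON of a run's /actions
    listing: {"value": [{"name": ..., "properties": {"inputsLink": {...}}}]}.
    """
    found = []
    for action in actions_response.get("value", []):
        props = action.get("properties", {})
        for kind in ("inputsLink", "outputsLink"):
            link = props.get(kind) or {}
            uri = link.get("uri")
            if uri:
                # Drop the query string so the SAS signature is never printed.
                found.append((action.get("name", "?"), kind, uri.split("?", 1)[0]))
    return found


if __name__ == "__main__":
    # Hypothetical response fragment for illustration only.
    sample = {"value": [
        {"name": "Get_secret_version",
         "properties": {"outputsLink": {"uri": "https://example.invalid/out?sig=x"}}},
    ]}
    print(content_links(sample))
```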

So that’s DATA access, exposed via the control plane.

But wait, I hear you say… that wasn’t actual data in the control plane, it was a link to the data in the data plane…

A distinction without a difference when that URL is a pre-authenticated URL which can be opened by anyone, from anywhere, with no controls…

This could potentially be called an Insecure Direct Object Reference (IDOR), and in any case it is an insecure default configuration.

Microsoft’s Response

I raised this with Microsoft Security Response Center and the timeline is as follows:

Timeline

- 19th December 2024 @ 14:22 - Raised a submission to MSRC: VULN-143948: Azure Management API Exposes KeyVault Secrets Metadata in breach of RBAC

- 23rd December 2024 @ 10:34 - Status changed to Review/Repro.

- 13th January 2025 @ 09:44 - Response from MSRC:

Thank you again for submitting this issue to Microsoft. We determined that the reported behavior is not a vulnerability since the domain seen in the headers is not related to any key Vaults that Microsoft owns but is the domain of the Web App that is serving the connector requests. Additionally, If customer wants to secure the secret inputs and outputs, it can be secured in the run histories as mentioned in the below documentation: https://learn.microsoft.com/en-us/azure/logic-apps/logic-apps-securing-a-logic-app?tabs=azure-portal#secure-data-in-run-history-by-using-obfuscation. This case is now closed. We thank you for your cooperation and look forward to working with you again in the future.

- 13th January 2025 @ 11:16 - Reply from me to MSRC:

I feel that this is a mistake – whilst I recognised after I raised it that the domain mentioned was in fact the API Connection for the vault and therefore in my tenant, I do feel that being able to access data from the control plane is a poor security design.

It requires no KeyVault or Logic App role based permissions and only Reader.

Because this is accessible from the management plane with such low permissions, and because it is exposed via a SAS URI that is accessible from anywhere without additional authentication, this is bad.

Yes, you can mitigate by use of Secure Inputs/Outputs and/or IP restrictions on content access – neither of those are default configuration and so Microsoft’s default configuration of these apps/products is woefully insecure and represents both an RBAC failure and a failure of separation of management plane and data plane.

In addition, this enumeration is not logged and so can’t be detected if a customer feels (as I do) that it should be monitored.

- 29th January 2025 @ 06:30 - Reply from MSRC:

We provide comprehensive documentation detailing the procedures for enabling secure settings for customers. The SAS URL generated from the Get Runs request is time-sensitive, and users must possess reader permissions to access the shared link. Furthermore, activity logs can be enabled to monitor GET/Read actions on the resources. We are also in the process of implementing default configurations to enhance secure operations in the future. In the meanwhile, customer should use the additional documented steps wherever needed based on their scenarios.

- 29th January 2025 @ 07:23 - Reply from me to MSRC:

I would counter that it’s not possible to log access to the SAS URI in my testing. With full diagnostic logging enabled on both the key vault and the logic app, there are next to no logs against the provider Microsoft.Logic. Logs of Microsoft.KeyVault do show the secret access at the time of the logic app workflow run, but don’t record subsequent accesses via run history and the SAS URI emitted in the run history. This therefore means it is not possible to write any form of detection for this stealthy secret exfiltration. And while the SAS URI is time sensitive (in practice a new URI seems to be generated hourly and the URI is valid for a little over 4 hours - I find that the URI’s signedExpiry value is set to the top of the hour, 4 hours from the current hour, but that it can still be valid up to 10 minutes after that time due to what I presume is eventual consistency, which is not uncommon in cloud services)

- 29th January 2025 @ 09:30 - I challenged MSRC:

“Although you say this isn’t a vulnerability, you are also saying you will make changes going forward. This therefore, to me, says it is a vulnerability.”

- 5th February 2025 @ 05:00 - Response from MSRC:

After internal verification, we would like to clarify that the logs are available solely for internal use and not accessible to customers. Furthermore, the engineering team is prioritizing efforts to enhance security; however, we do not have specific timelines at this moment.

- 8th February 2025 @ 08:51 - Final response from MSRC:

“We appreciate your patience and understanding. We understand that the assessment results may have been disappointing for you. We are truly grateful for the time and effort you invested in reaching out to us and sharing your report. Your feedback is valuable to us, and we deeply value customers and security custodians like you who help us enhance our offerings.

The specific behavior mentioned in your report aligns with the expected functionality of the product. We already have an existing public documentation to help customers configure their systems based on their custom requirements. The documentation guides customers through setting specific parameters to achieve the desired configuration.

At Microsoft, we prioritize security for our customers, and we continue to look for ways to enhance the security of our products and services. We are here to support you and welcome any further questions or feedback you may have. Please continue your research, and we look forward to your future contributions.

We are grateful for your continued support and look forward to your future contributions.”

My Thoughts On The Microsoft Response

I personally feel that there is still a lack of recognition that this is a breach of control plane and data plane isolation - data access is granted from the control plane with no data plane specific permissions.

In addition, it’s an insecure-by-default configuration - Microsoft are putting all the onus on the customer to be secure, to accumulate technical debt, etc., so that Microsoft don’t have to live up to their duties as part of the shared responsibility model.

The fact that Microsoft dismiss this as being by design, and therefore not a vulnerability, doesn’t sit well with me.

Why Does This Matter?

- It’s not widely known/advertised

- It does not conform to a least-privilege model - managing the resource should never allow data access

- Exposing this data allows an attacker to silently exfiltrate data from every Logic App in the tenant if they have Reader (or an equivalent role with, e.g., */read as a defined permission)

- That data may not just include operational data but also customer data and secrets/credentials

- One of the most common cloud compromise vectors is credential theft - therefore allowing an attacker (whether a malicious insider or a criminal using a stolen credential) to exfiltrate any secret may be the foothold and lateral movement opportunity they need to take over critical infrastructure

- YOU CANNOT DETECT THIS ACTIVITY


I played around with a few ways of trying to remediate the risk:

Blocking Access to Azure Resource Explorer

You could block access to Azure Resource Explorer, and it’s pretty easy. Since I don’t have any enterprise tooling/EDR on my personal machine, I tested this simply by adding an entry to the hosts file on my Windows machine, using the following PowerShell one-liner to redirect it to my local machine:

```powershell
Add-Content -Path C:\Windows\System32\drivers\etc\hosts -Value "127.0.0.1 resources.azure.com"
```

This will leave the bottom half of your hosts file looking something like this (you need local Administrator privileges to edit this file; I did this from an elevated PowerShell session and also used that to open the file in Notepad afterwards to confirm the change: notepad C:\Windows\System32\drivers\etc\hosts):

```text
# localhost name resolution is handled within DNS itself.
# 127.0.0.1 localhost
# ::1 localhost
127.0.0.1 view-localhost # view localhost server
127.0.0.1 resources.azure.com
```

This does block access to the Azure Resource Explorer:

However, this doesn’t achieve much since, as I mentioned way back at the start, it’s just an API browser front-end for the management.azure.com management API.

So let’s block that then? (If you already know what’s gonna happen, shh…)

Blocking Access to management.azure.com

This time I ran the following PowerShell one-liner to also redirect management.azure.com to my local machine:

```powershell
Add-Content -Path C:\Windows\System32\drivers\etc\hosts -Value "127.0.0.1 management.azure.com"
```

```text
# localhost name resolution is handled within DNS itself.
# 127.0.0.1 localhost
# ::1 localhost
127.0.0.1 view-localhost # view localhost server
127.0.0.1 management.azure.com
```

Unfortunately, this API is fundamental to Azure - if you block it, you will break the portal, ARM deployments, Terraform deployments of Azure resources, and Az PowerShell/Az CLI.

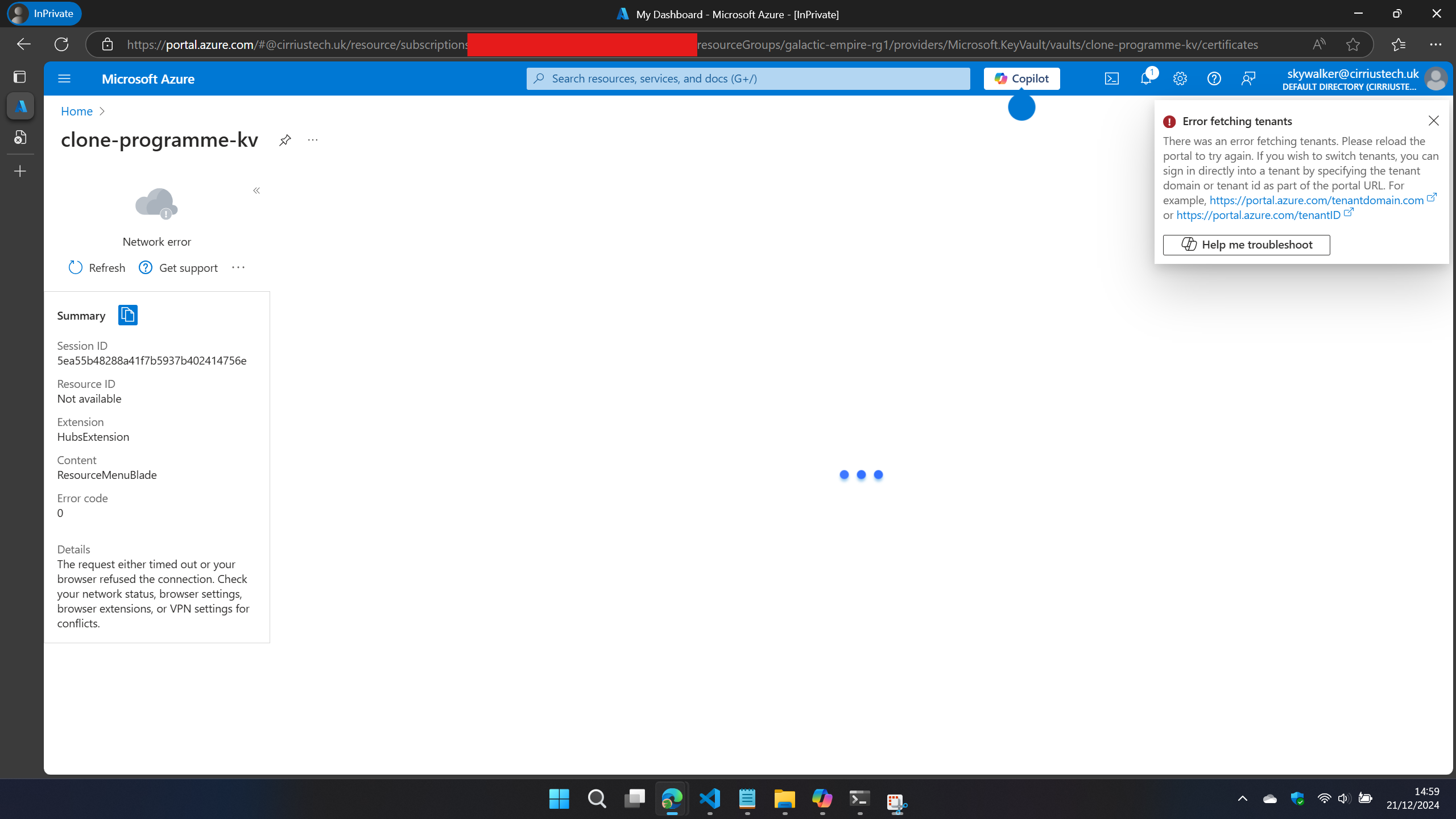

Don’t believe me? Let’s reload the Azure portal after adding this hosts file entry:

Not good huh?!
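If you do experiment with hosts-file entries like the ones above (say, across a few test machines), it helps to confirm which names are actually being redirected. Here's a minimal Python sketch of that check; the function name is hypothetical, and it only understands the simple "address hostname [hostname...] # comment" layout shown above. Since redirecting management.azure.com breaks Azure itself, treat this as a diagnostic for your own experiments, not a mitigation.

```python
def redirected_hosts(hosts_text: str, target: str = "127.0.0.1") -> set:
    """Return the hostnames a hosts file maps to `target`, ignoring comments."""
    names = set()
    for raw in hosts_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        address, *hostnames = line.split()
        if address == target:
            names.update(h.lower() for h in hostnames)
    return names


if __name__ == "__main__":
    # Sample text in the same shape as the hosts excerpts above.
    sample = "# ::1 localhost\n127.0.0.1 resources.azure.com\n"
    print(sorted(redirected_hosts(sample)))
```

On Windows, you would pass in the contents of C:\Windows\System32\drivers\etc\hosts instead of the sample string.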

Azure Resource Explorer - End Of Life Announcement

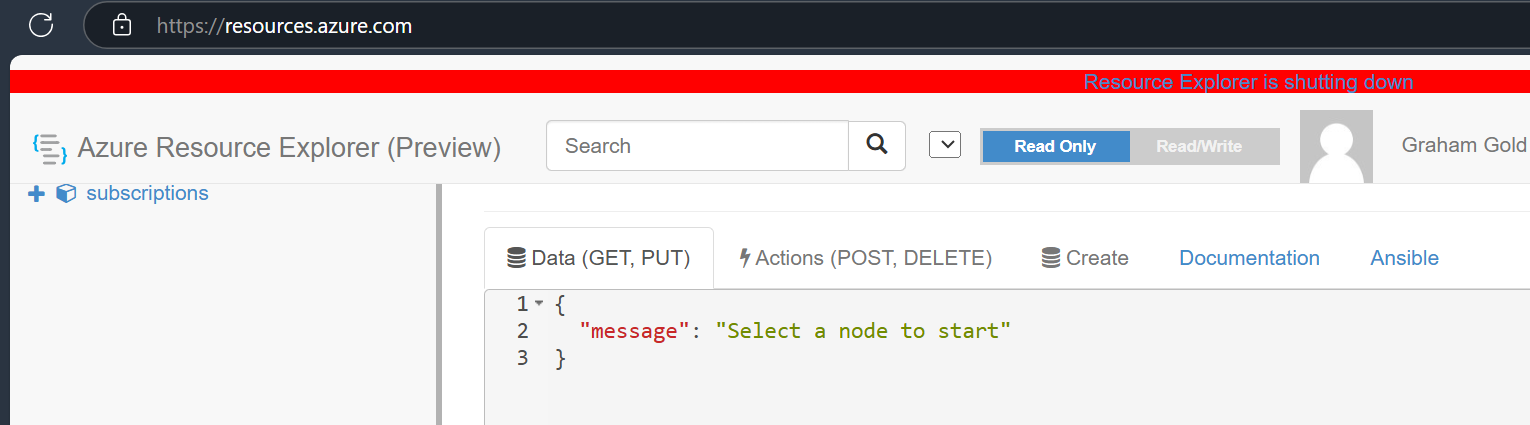

On the 13th January 2025, I noticed something new:

A banner at the top of Azure Resource Explorer stating that “Resource Explorer is shutting down”…

Although the timing seems suspicious, the link takes you to a GitHub issue raised on June 28th 2024 stating:

Azure Resource Explorer - resources.azure.com will be shutting down soon. We recommend alternatives such as https://github.com/projectkudu/ARMClient or the Azure Portal for managing Azure resources.

The github repo will still be available.

Thank you.

Minimising The Risk

There are things that we can do which will reduce our risk a little.

Attack Surface Reduction - Who Really Needs Reader?

Review your Azure RBAC permissions to make sure that the principals (human and non-human alike) who have the Reader role (or a role that also has */read or similar) are kept to as few as your business needs in order to operate.
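As a starting point for that review, here's a minimal sketch that filters an exported list of role assignments down to the built-in roles I'm certain include read on everything (Reader grants */read; Contributor and Owner grant *). It assumes the field names in the JSON printed by az role assignment list --all --output json (principalName, roleDefinitionName, scope); the sample data is hypothetical, and custom roles containing */read are not detected.

```python
from typing import Dict, Iterable, List, Tuple

# Built-in roles whose actions include read on every resource type:
# Reader grants "*/read"; Contributor and Owner grant "*".
BROAD_READ_ROLES = {"Reader", "Contributor", "Owner"}


def broad_readers(assignments: Iterable[Dict]) -> List[Tuple[str, str, str]]:
    """(principal, role, scope) for every assignment of a broad read role.

    Field names follow the JSON printed by `az role assignment list`;
    custom roles containing */read are NOT detected by this sketch.
    """
    return sorted(
        (a.get("principalName", "?"), a["roleDefinitionName"], a["scope"])
        for a in assignments
        if a.get("roleDefinitionName") in BROAD_READ_ROLES
    )


if __name__ == "__main__":
    # Hypothetical assignments for illustration only.
    demo = [{"principalName": "bob@contoso.example",
             "roleDefinitionName": "Reader", "scope": "/subscriptions/sub"}]
    print(broad_readers(demo))
```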

Attack Surface Reduction - Tighten The Scope

Review your Azure RBAC permissions to make sure that the scope of the above permissions is as tight as possible - don’t grant those permissions at Management Group, Subscription or even Resource Group level if you don’t need to; allow it only at resource level if at all possible.
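Scope breadth can be read straight off the scope string of each assignment. Here's a minimal sketch that buckets scope strings into the levels mentioned above; the function name is hypothetical and it only recognises the common ARM scope shapes ("/", management group, subscription, resource group, resource), returning "unknown" for anything else.

```python
def scope_level(scope: str) -> str:
    """Classify an Azure RBAC scope string by how broad it is."""
    parts = [p for p in scope.split("/") if p]
    lower = [p.lower() for p in parts]
    if not parts:
        return "root"  # "/" - the root scope
    if lower[:3] == ["providers", "microsoft.management", "managementgroups"]:
        return "management group"
    if lower[0] == "subscriptions":
        if len(parts) == 2:
            return "subscription"
        if len(parts) == 4 and lower[2] == "resourcegroups":
            return "resource group"
        if len(parts) > 4 and "providers" in lower:
            return "resource"
    return "unknown"


if __name__ == "__main__":
    print(scope_level("/subscriptions/sub/resourceGroups/rg"))  # resource group
```

Anything that comes back as "management group", "subscription" or "resource group" for a Reader-style role is a candidate for narrowing.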

Attack Surface Reduction - Reduce Standing Privilege

If you have a Microsoft Entra ID P2 or M365 E5 licence, you can leverage Entra Privileged Identity Management (PIM) to reduce standing privilege by requiring those who do need the access to activate a PIM assignment that grants temporary, time-bound access.


You may be familiar with PIM in terms of Just-In-Time (JIT) access to Entra roles, but it has also had the ability to do the same with groups for quite some time now (originally known as Privileged Access Groups, now called PIM for Groups).

A really important stipulation here - you CANNOT use on-premises Active Directory groups synced with Entra. The group must be either a:

- Microsoft Entra Security Group

- M365 Group

Assigning the role to a group managed by PIM (with Eligible rather than Permanent membership) means that, by default, nobody is in the group unless and until they successfully activate and elevate into it.

You can require approval from other people upon activation although I would caution against this for lesser privileged and more frequently used roles or groups as it can lead to approval fatigue.

You can require MFA upon activation but remember that this doesn’t guarantee the user is presented with an MFA challenge on every activation request - if the user has a valid authentication token with a valid MFA claim in it, they won’t be re-MFAed before their token is due to expire.

You can use authentication contexts instead to require step-up authentication if you need this, e.g. requiring a different authentication method from the user’s default (such as FIDO2, even if there is already a valid MFA claim from the Authenticator app).

Another caveat - you would now have to monitor for any abuse of the PIM config to escalate privilege.

Custom Roles

Whilst I believe this is a fundamental flaw in Microsoft RBAC in Azure that they themselves should fix, there is a workaround I’ve tested that entirely mitigates this vulnerability.

You could create a new custom role, cloned from the Reader built-in role but crucially with some notActions added, as shown in the role definition below:

```json
{
  "id": "/subscriptions/35563d84-e3af-43c1-a0d3-e7aa84ebf1aa/providers/Microsoft.Authorization/roleDefinitions/6025e984-fbcb-407f-966f-7ff43eebef84",
  "properties": {
    "roleName": "Global Control Plane Reader",
    "description": "",
    "assignableScopes": [
      "/subscriptions/35563d84-e3af-43c1-a0d3-e7aa84ebf1aa"
    ],
    "permissions": [
      {
        "actions": [
          "*/read"
        ],
        "notActions": [
          "Microsoft.OperationalInsights/workspaces/query/LogicAppWorkflowRuntime/read",
          "Microsoft.Logic/workflows/runs/actions/repetitions/requestHistories/read",
          "Microsoft.Logic/workflows/runs/actions/requestHistories/read",
          "Microsoft.Logic/workflows/triggers/histories/read",
          "Microsoft.Logic/workflows/runs/read",
          "Microsoft.Logic/workflows/runs/actions/read",
          "Microsoft.Logic/workflows/runs/actions/repetitions/read",
          "Microsoft.Logic/workflows/runs/actions/repetitions/listExpressionTraces/action",
          "Microsoft.Logic/workflows/runs/actions/scoperepetitions/read"
        ],
        "dataActions": [],
        "notDataActions": []
      }
    ]
  }
}
```

This works because, given Microsoft’s failure to prevent data access from the control plane, we need to subvert the wildcard permission in the Reader built-in role by explicitly blocking the permissions that expose data-plane content.
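A role's effective control-plane permissions are, roughly, "actions minus notActions", with * as a wildcard. Here's a simplified model of that evaluation that you could use to sanity-check a notActions list before deploying it; it is my sketch, not Azure's implementation, and it ignores deny assignments, dataActions and conditions. The NOT_ACTIONS list is an excerpt of the custom role above.

```python
from fnmatch import fnmatchcase
from typing import Iterable


def _matches(operation: str, pattern: str) -> bool:
    # Operation names are compared case-insensitively. fnmatch's '*' matches
    # any run of characters, including '/', which is what is modelled here.
    return fnmatchcase(operation.lower(), pattern.lower())


def is_allowed(operation: str, actions: Iterable[str],
               not_actions: Iterable[str]) -> bool:
    """Simplified model: granted by some action and not removed by any notAction."""
    granted = any(_matches(operation, a) for a in actions)
    removed = any(_matches(operation, n) for n in not_actions)
    return granted and not removed


ACTIONS = ["*/read"]
NOT_ACTIONS = [  # excerpt of the custom role's notActions
    "Microsoft.Logic/workflows/runs/read",
    "Microsoft.Logic/workflows/runs/actions/read",
    "Microsoft.Logic/workflows/triggers/histories/read",
]

if __name__ == "__main__":
    for op in ("Microsoft.Logic/workflows/read",
               "Microsoft.Logic/workflows/runs/actions/read"):
        print(op, is_allowed(op, ACTIONS, NOT_ACTIONS))
```

Under this model, entries such as .../listExpressionTraces/action are not matched by */read in the first place, so they only come into play if the role's actions are later widened.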

Whilst this does work, it shifts the pain from Microsoft as the Cloud Service Provider to you as the customer.

You’d be letting them off the hook for failing to uphold their side of the shared responsibility model whilst the burden of working out what permissions to block, and keeping that up to date as the cloud services evolve has now fallen on you as you maintain custom roles, not Microsoft.

IP Restrictions

Azure Logic Apps have a capability (off by default) that enables limiting access to workflows to specified IP Addresses/Ranges: https://learn.microsoft.com/en-us/azure/logic-apps/logic-apps-securing-a-logic-app?tabs=azure-portal#restrict-ip

This option helps you secure access to run history based on requests from a specific IP address range. Specifically, there are two types of IP restriction available:

- Restrict triggering of workflows to specified IP addresses/IP address ranges - this won’t help with this issue, it just controls where you can trigger the workflow runs from.

- Restrict access to content (e.g. workflow run history) to specified IP addresses/IP address ranges - this is what you need to restrict external access; that way, even if someone were to share the SAS URLs outside of your organisation, they would be prevented from retrieving the contents of the referenced storage blob.
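The content restriction is stored on the workflow resource itself, so it can be checked from the resource JSON. Here's a minimal sketch assuming the ARM property path properties.accessControl.contents.allowedCallerIpAddresses[].addressRange (the shape used in the Logic Apps ARM template examples). It handles CIDR ranges and single addresses only, and treating an explicitly empty list as "allow nobody" is this sketch's own assumption.

```python
import ipaddress
from typing import Dict


def caller_permitted(workflow: Dict, caller_ip: str) -> bool:
    """Would a request for run-history content from `caller_ip` pass the IP filter?

    Reads properties.accessControl.contents.allowedCallerIpAddresses from a
    workflow's ARM JSON. Handles CIDR ranges and single addresses only.
    """
    contents = (workflow.get("properties", {})
                        .get("accessControl", {})
                        .get("contents", {}))
    if "allowedCallerIpAddresses" not in contents:
        # Nothing configured (the default): content is not IP-restricted.
        return True
    ip = ipaddress.ip_address(caller_ip)
    # Assumption: an empty list means no caller is allowed.
    return any(
        ip in ipaddress.ip_network(entry["addressRange"], strict=False)
        for entry in contents["allowedCallerIpAddresses"]
    )


if __name__ == "__main__":
    # Hypothetical workflow fragment.
    wf = {"properties": {"accessControl": {"contents": {
        "allowedCallerIpAddresses": [{"addressRange": "192.168.12.0/23"}]}}}}
    print(caller_permitted(wf, "203.0.113.7"))
```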

Obfuscation

Azure Logic Apps have a capability (off by default) that enables hiding inputs and outputs in actions within Logic App workflows: https://learn.microsoft.com/en-us/azure/logic-apps/logic-apps-securing-a-logic-app?tabs=azure-portal#obfuscate

While this does block access to the inputs/outputs specified both in the Azure Portal (data plane) and via the management APIs/Azure Resource Explorer (control/management plane), there are some caveats to be aware of:

- You have to enable it on each workflow action you want it on, in each workflow you have.

- There may be times where you don’t want secure outputs enabled e.g. you want the output of a workflow action to be visible

- It is not fully supported by all action types (see table below)

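| Action type | Secure Inputs | Secure Outputs |
|---|---|---|
| Append to array variable | Unsupported | Unsupported |
| Append to string variable | Unsupported | Unsupported |
| Compose | | Unsupported |
| Decrement variable | Unsupported | Unsupported |
| For each | Unsupported | Unsupported |
| If | Unsupported | Unsupported |
| Increment variable | Unsupported | Unsupported |
| Initialize variable | Unsupported | Unsupported |
| Parse JSON | | Unsupported |
| Recurrence | Unsupported | Unsupported |
| Response | | Unsupported |
| Scope | Unsupported | Unsupported |
| Set variable | Unsupported | Unsupported |
| Switch | Unsupported | Unsupported |
| Terminate | Unsupported | Unsupported |
| Until | Unsupported | Unsupported |
| Wait | | Unsupported |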
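Because the setting is per action, it is easy to miss one. Here's a minimal sketch that walks a workflow definition (the JSON in code view) and lists actions that could use Secure Outputs but don't, assuming the documented runtimeConfiguration.secureData.properties syntax. The mapping from the table's action names to Workflow Definition Language type values is my assumption, and in the ARM resource the definition sits under properties.definition.

```python
from typing import Dict, Iterator, List, Tuple

# Assumed WDL "type" values for the action types listed as not supporting
# Secure Outputs (Recurrence is a trigger, so it never appears under actions).
NO_SECURE_OUTPUTS = {
    "AppendToArrayVariable", "AppendToStringVariable", "Compose",
    "DecrementVariable", "Foreach", "If", "IncrementVariable",
    "InitializeVariable", "ParseJson", "Response", "Scope", "SetVariable",
    "Switch", "Terminate", "Until", "Wait",
}


def _walk(actions: Dict) -> Iterator[Tuple[str, Dict]]:
    """Yield (name, action) pairs, descending into container actions."""
    for name, action in actions.items():
        yield name, action
        yield from _walk(action.get("actions", {}))                     # Scope, For each, Until, If (true)
        yield from _walk(action.get("else", {}).get("actions", {}))     # If (false branch)
        for case in action.get("cases", {}).values():                   # Switch cases
            yield from _walk(case.get("actions", {}))
        yield from _walk(action.get("default", {}).get("actions", {}))  # Switch default


def unsecured_outputs(definition: Dict) -> List[str]:
    """Names of actions that could use Secure Outputs but do not."""
    missing = []
    for name, action in _walk(definition.get("actions", {})):
        if action.get("type") in NO_SECURE_OUTPUTS:
            continue  # obfuscation is not available for this type
        secured = (action.get("runtimeConfiguration", {})
                         .get("secureData", {})
                         .get("properties", []))
        if "outputs" not in secured:
            missing.append(name)
    return sorted(missing)
```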

One Last Thing - Exploitation

Now that I’ve walked you through this issue that I’ve named SilentReaper, I have something else to share…

I’ve created an exploit script that will authenticate to your tenant (you should authenticate as a user with Reader as their only Entra or Azure role) and walk through every logic app in every subscription in your tenant and dump out the inputs and outputs of every action in every run history for every workflow you have. (Remember I talked in my last post about attack paths…)

I call it Az-Skywalker and it is available here: https://github.com/Az-Skywalker/Az-Skywalker

Documentation and sample outputs are covered in the README, and a video demonstration is available on my YouTube channel here:

Acknowledgements & Prior Work

Summary - All You Need Is Read(er)

The fact that these issues arise from the Control Plane or Management Plane of the cloud provider APIs and represent, in my personal opinion, failures in RBAC enforcement and control plane/data plane separation adds a deeper dimension to their impact. These are not just generic security flaws; they reflect systemic architectural weaknesses in Microsoft’s cloud infrastructure.

And remember, All You Need Is Read

As ever, thanks for reading and feel free to leave comments down below!